From SQL database analytics and .NET content management web applications to custom XML databases, data mining, cleanup, and extraction, I have built a number of innovative web applications for managing data online.

WEB CONTENT MANAGEMENT SYSTEMS (CMS)

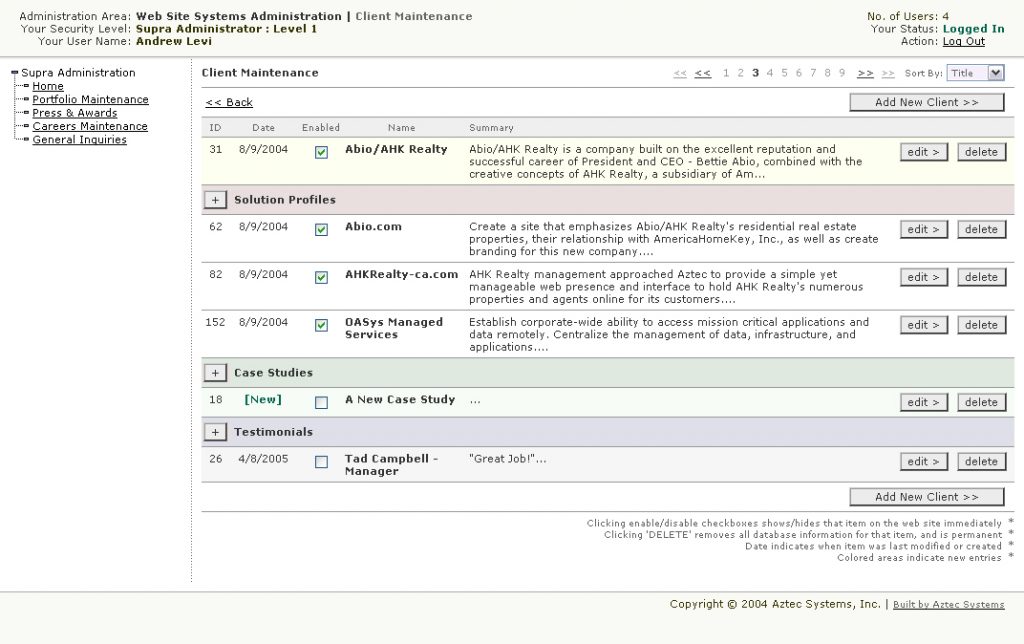

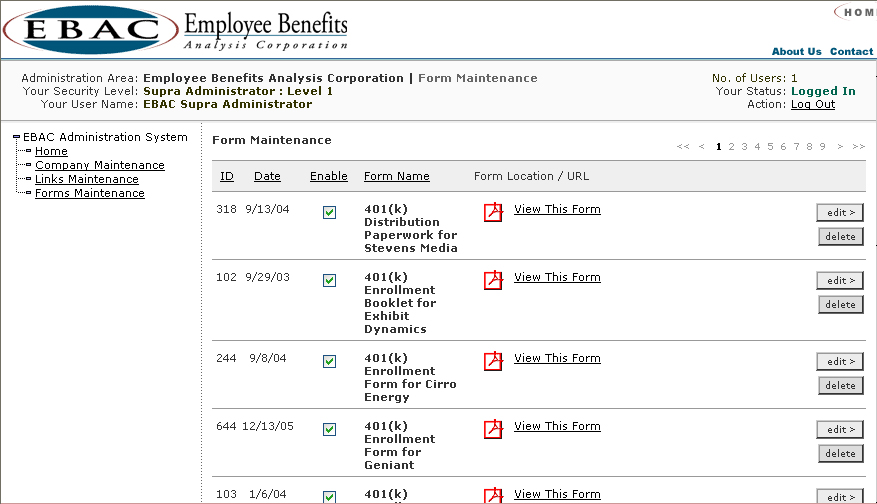

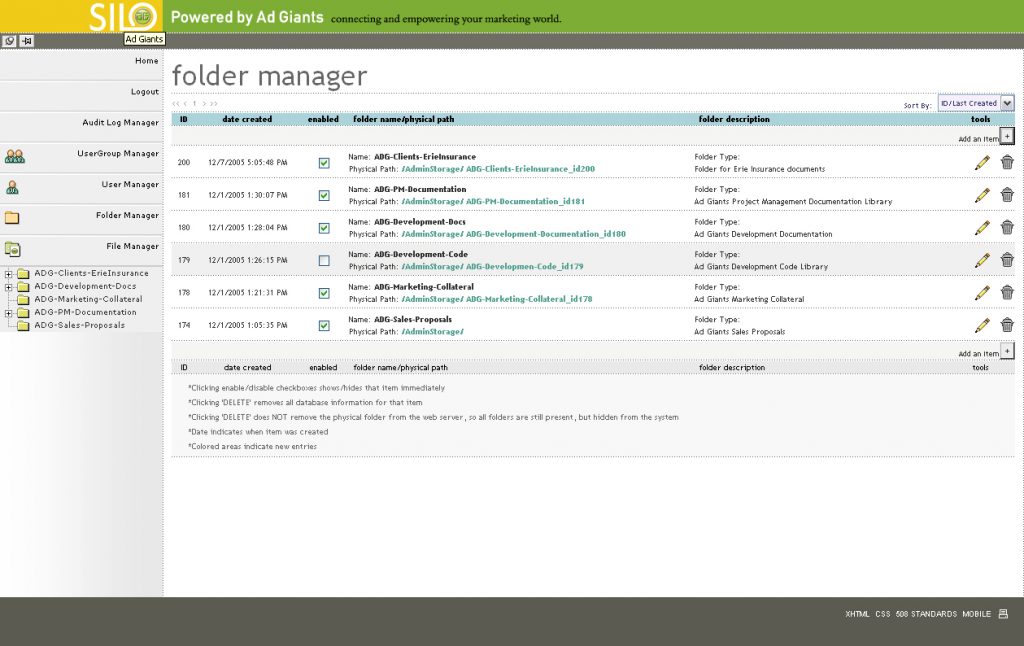

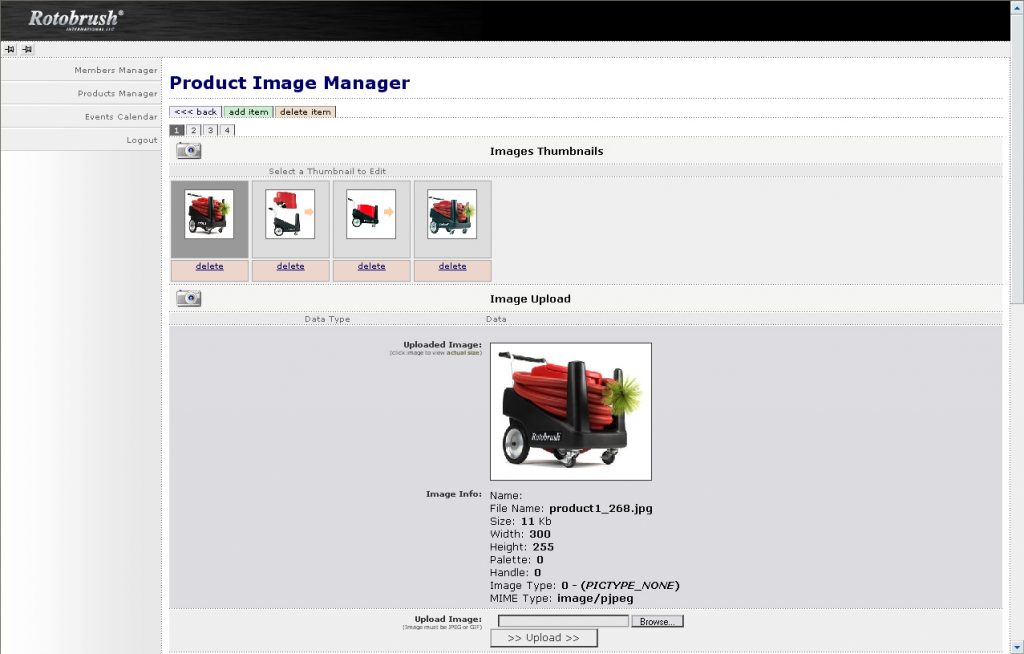

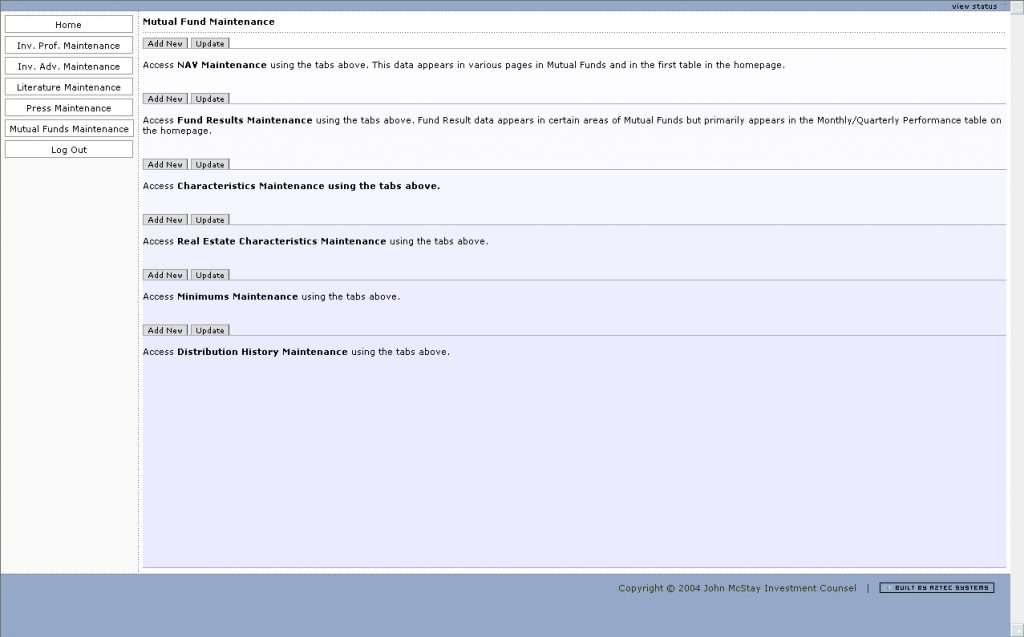

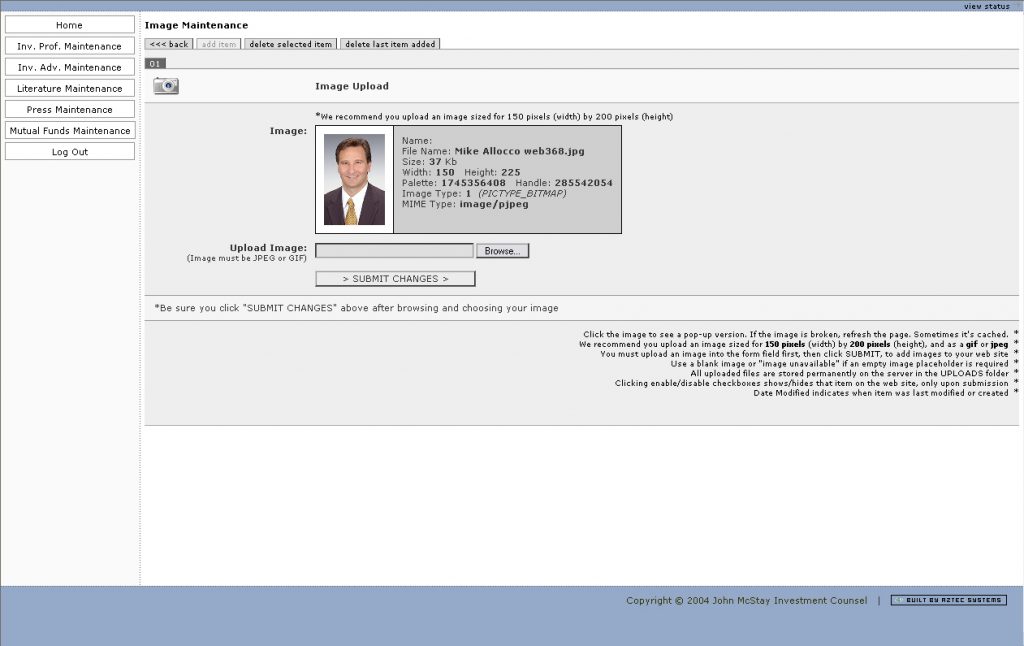

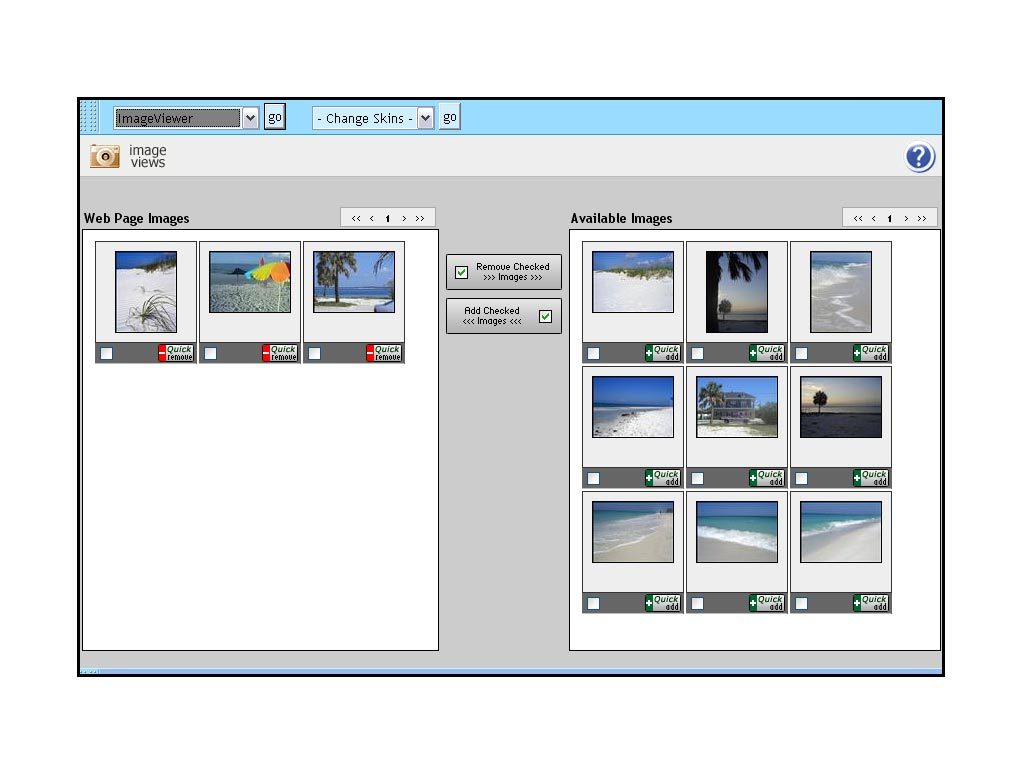

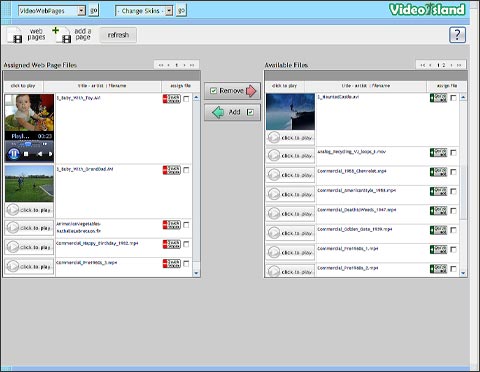

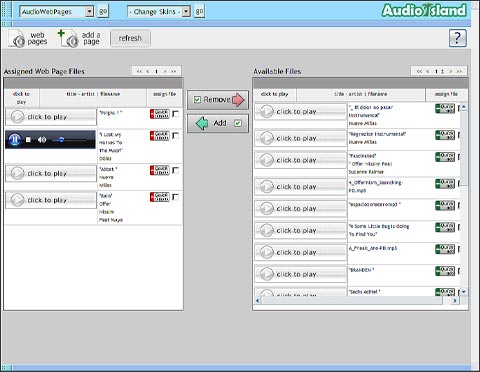

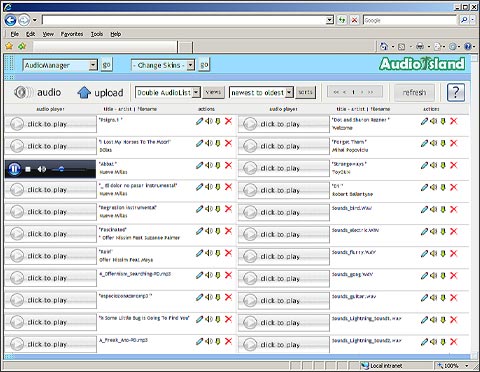

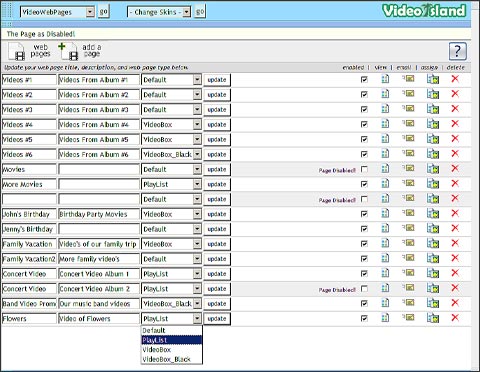

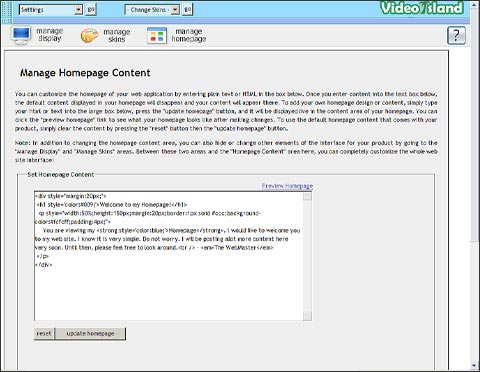

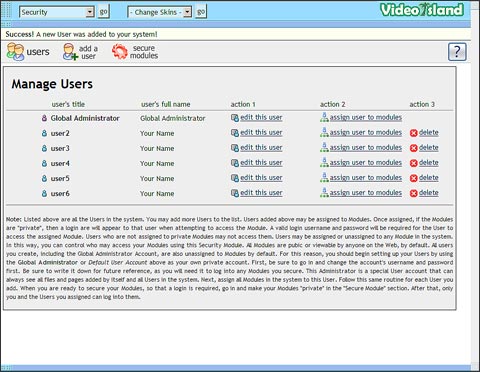

Most of the World Wide Web consists of what I call public-facing websites, the “pretty stuff”. But few people realize that most websites are supported on the back end by complex and elaborate content management systems. I have built many of these over the years for clients. Unlike most developers, I build the complete system, front to back, including the databases, server-side application code, and front-end user interfaces (UI).

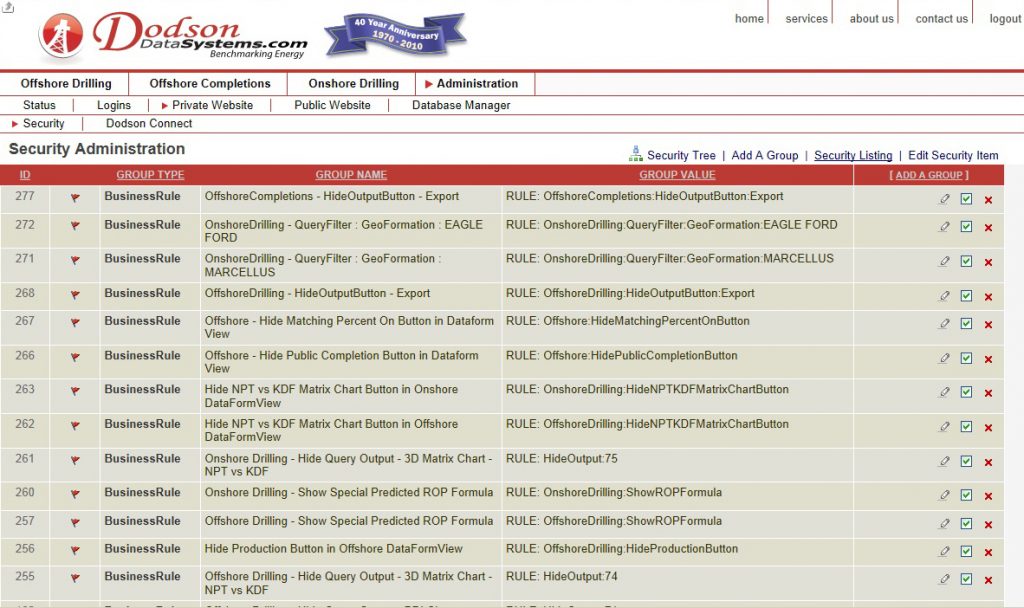

These private portals require a unique type of developer: one who understands the intricacies of good web development and how data flows and is used in online systems. The administrators who use these online management portals need secure access to these tools. So building CMS apps requires knowledge of their needs, of security, of architecture, of simplifying complex data management, of interface design, and of rich coding languages and frameworks, such as C#, .NET, Java, and PHP. Below are just a few of the CMS pieces I have designed over the years. Again, I designed the interfaces, the HTML, the scripting, the data schemas, and the C#/ASP code, compiled or interpreted, that runs them.

SQL DATA ACCESS, ANALYSIS, AND QUERY SYSTEMS

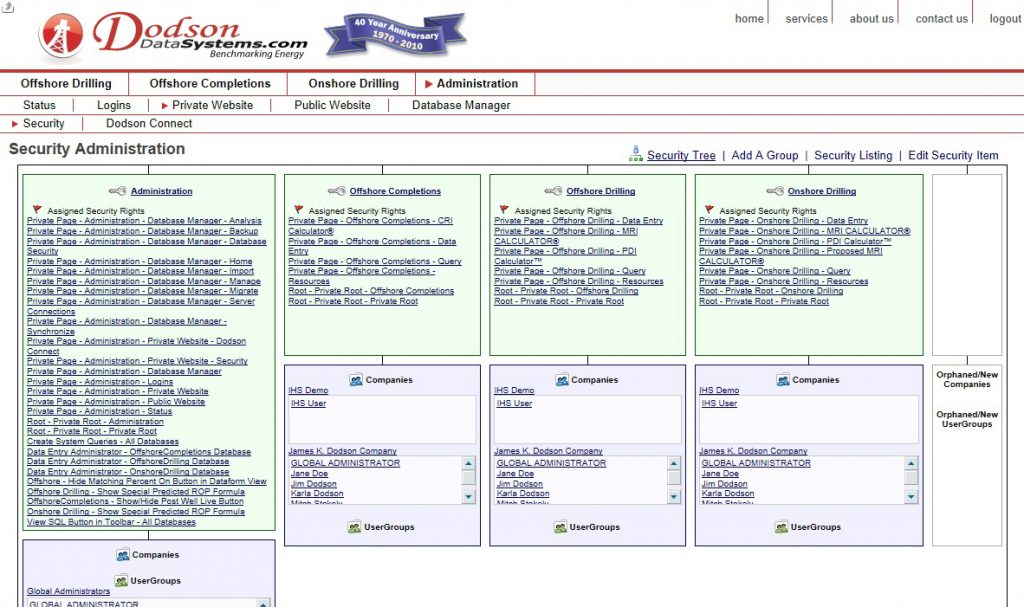

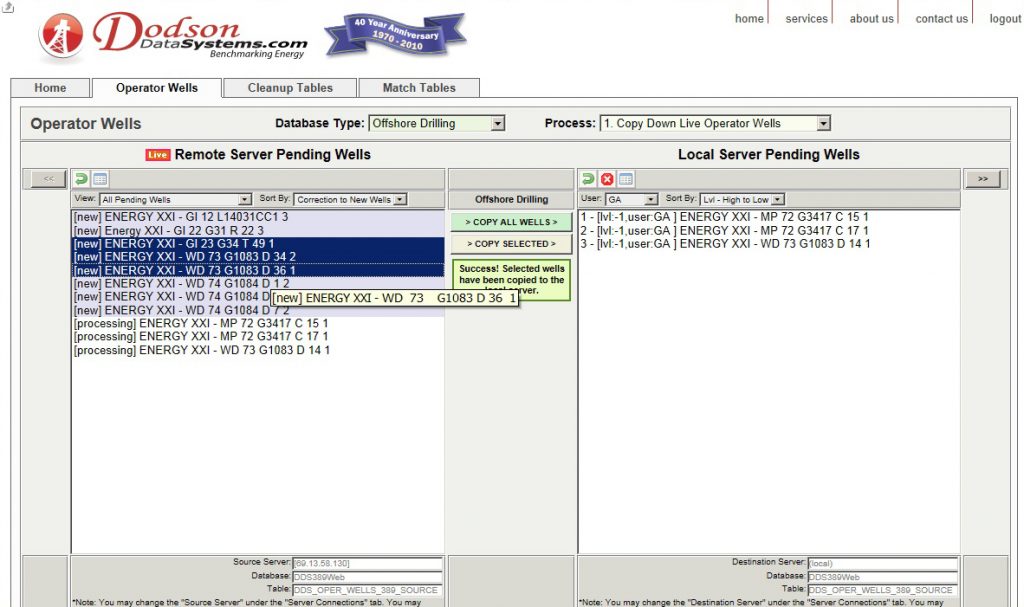

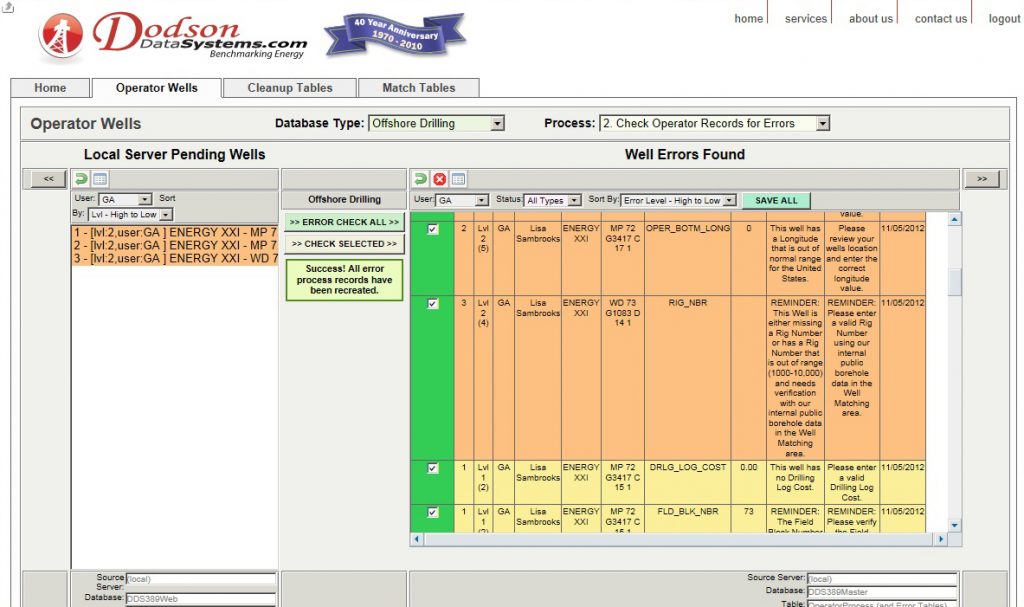

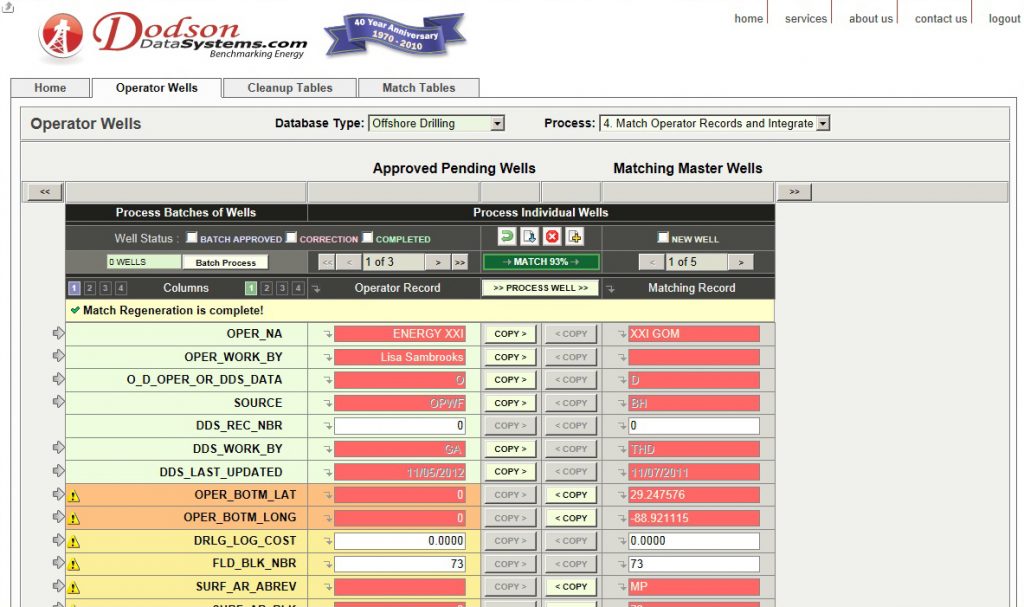

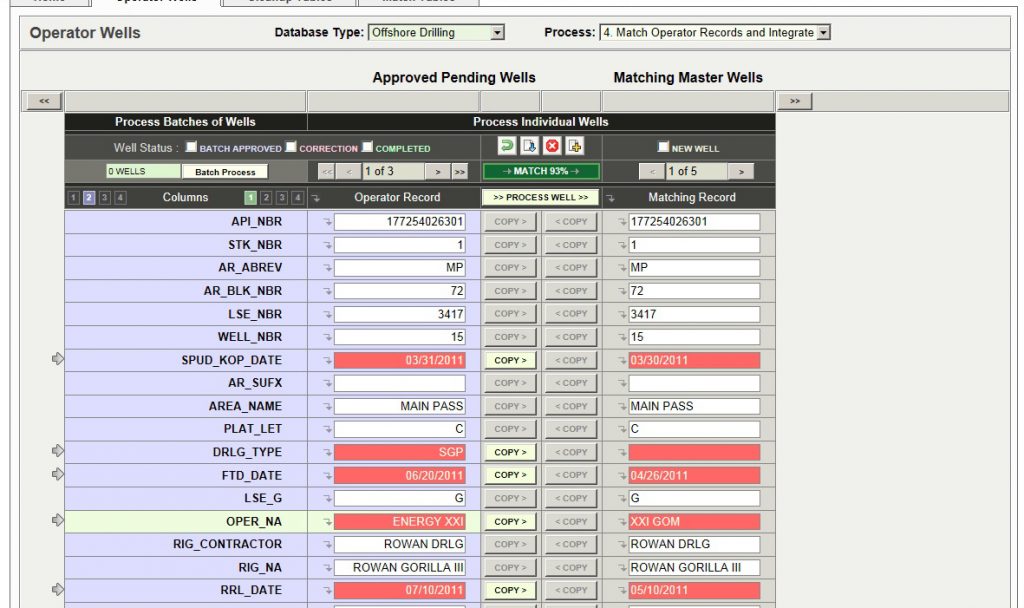

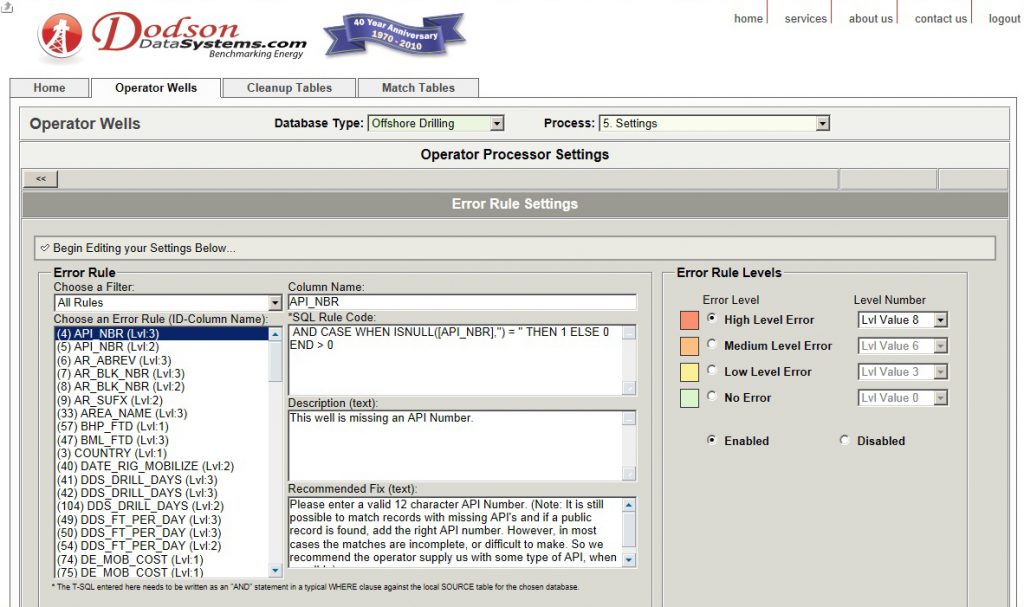

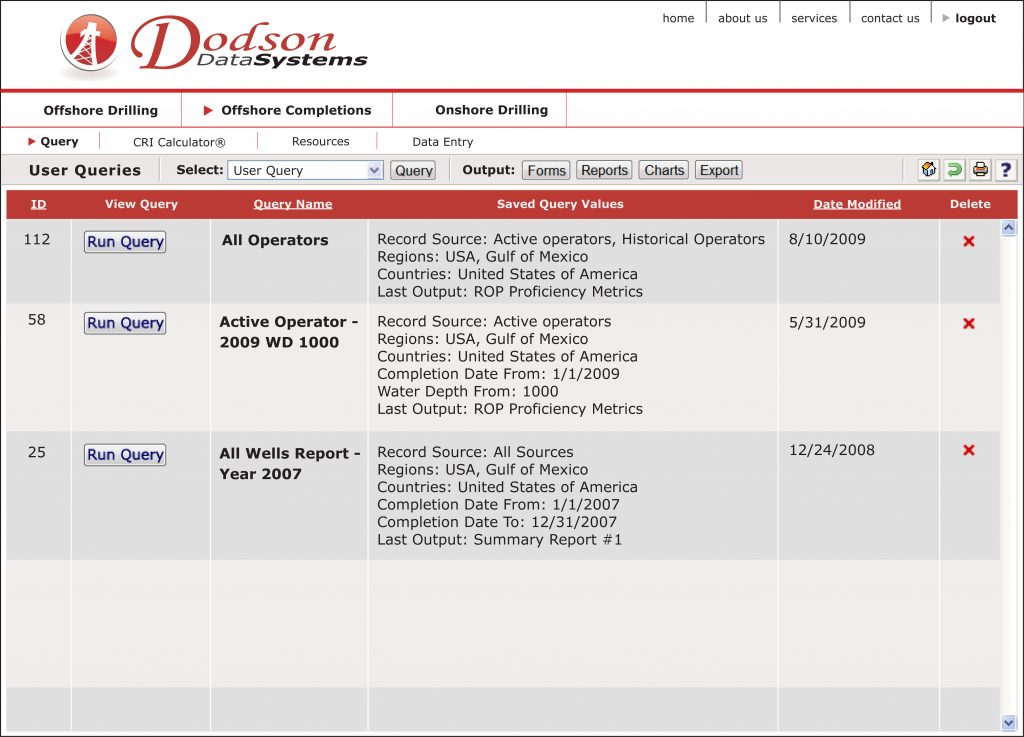

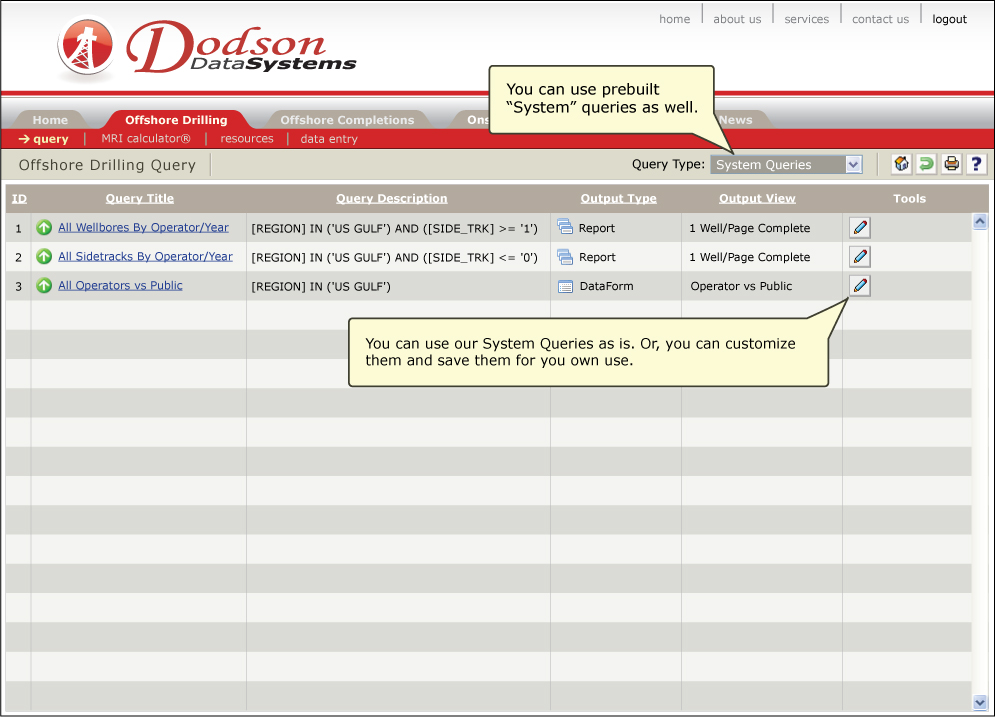

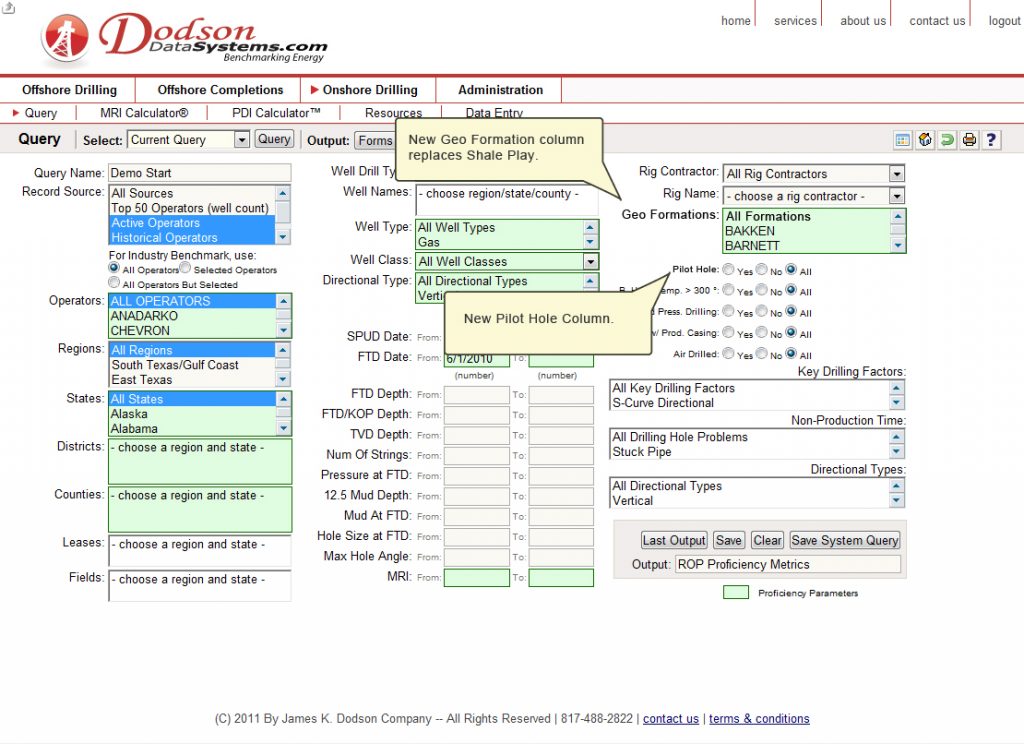

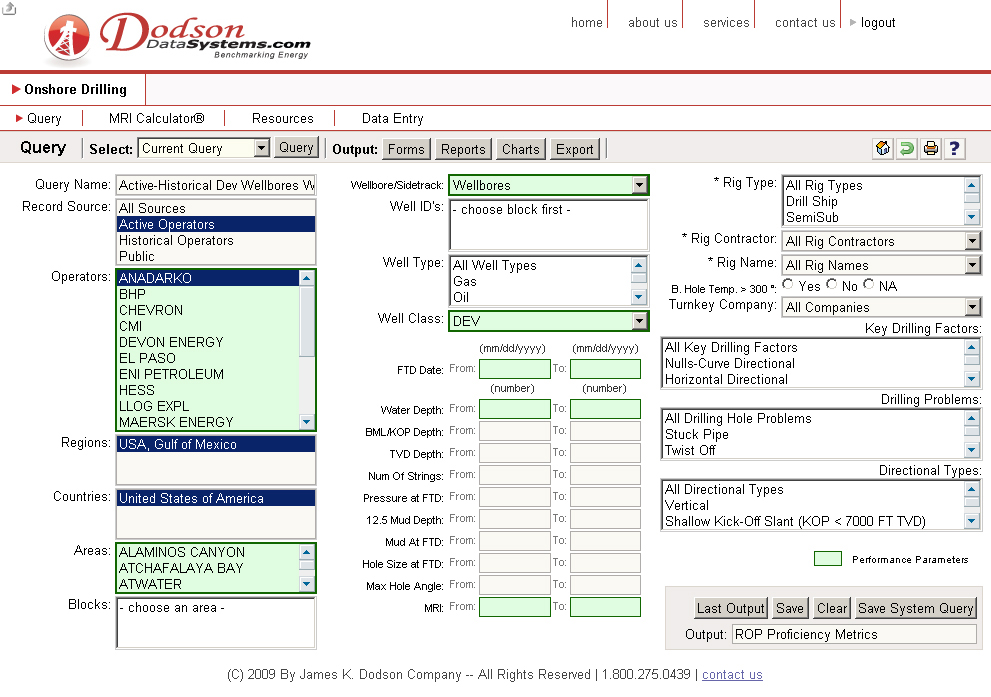

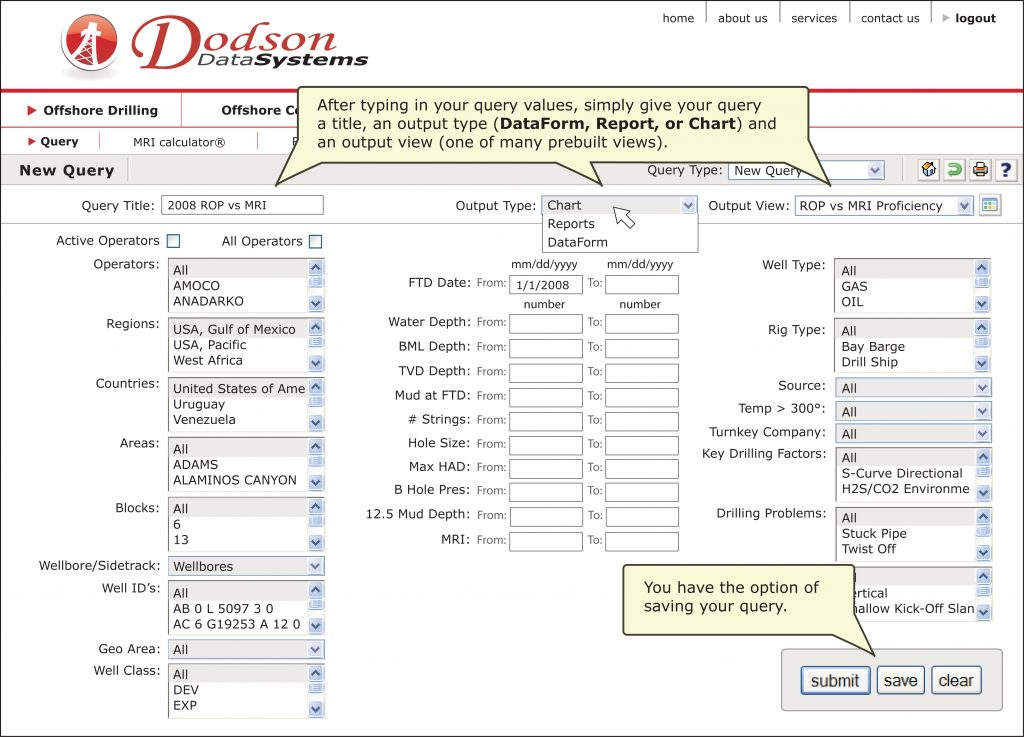

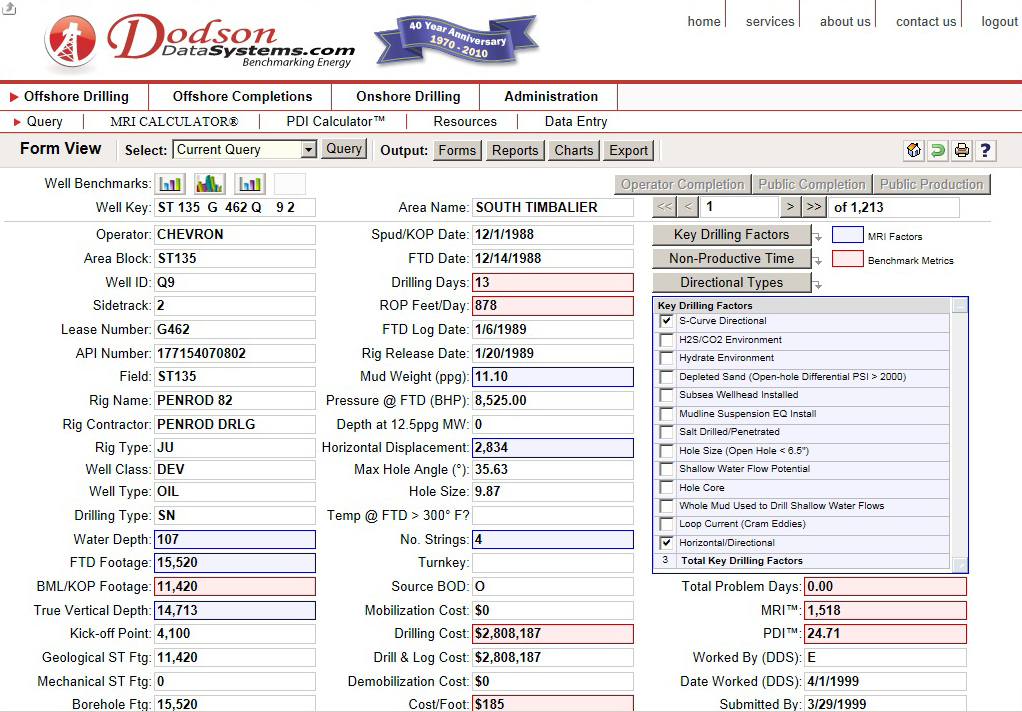

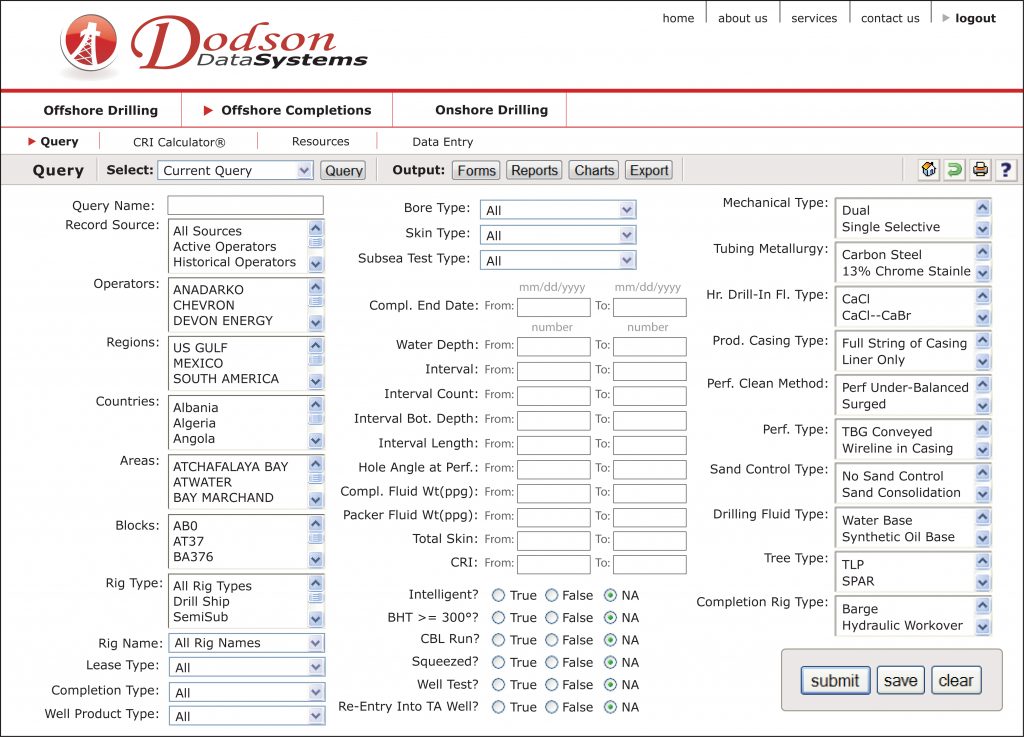

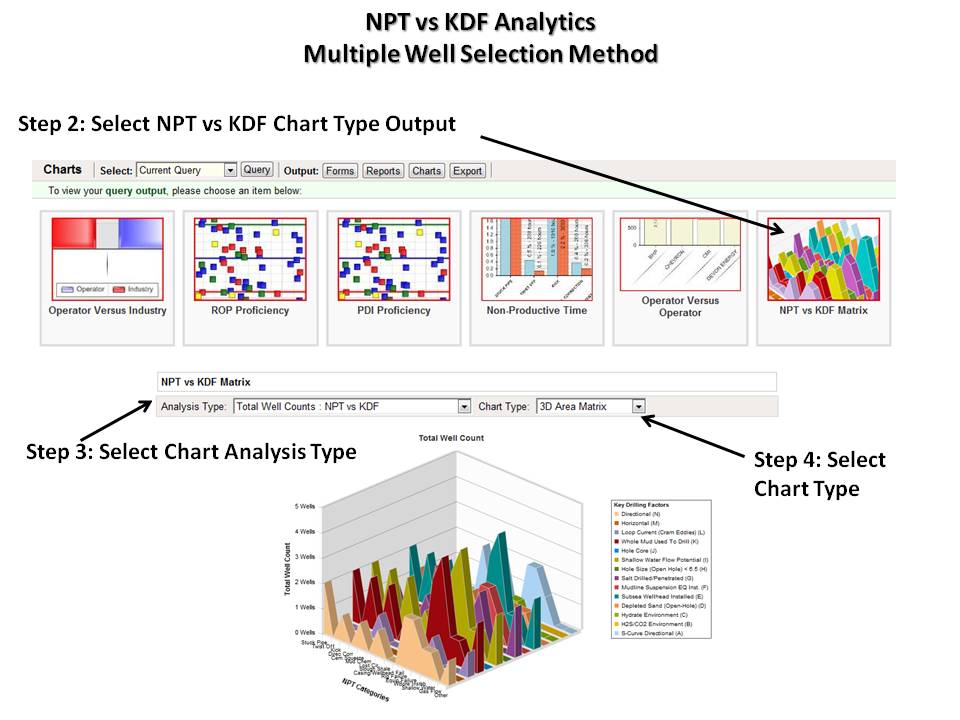

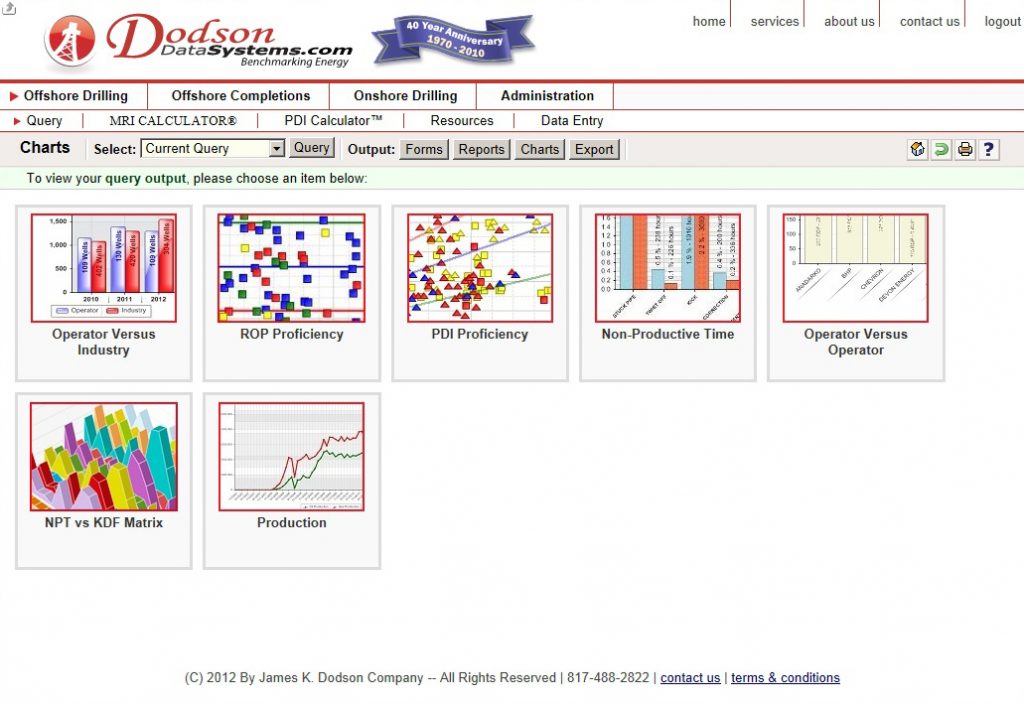

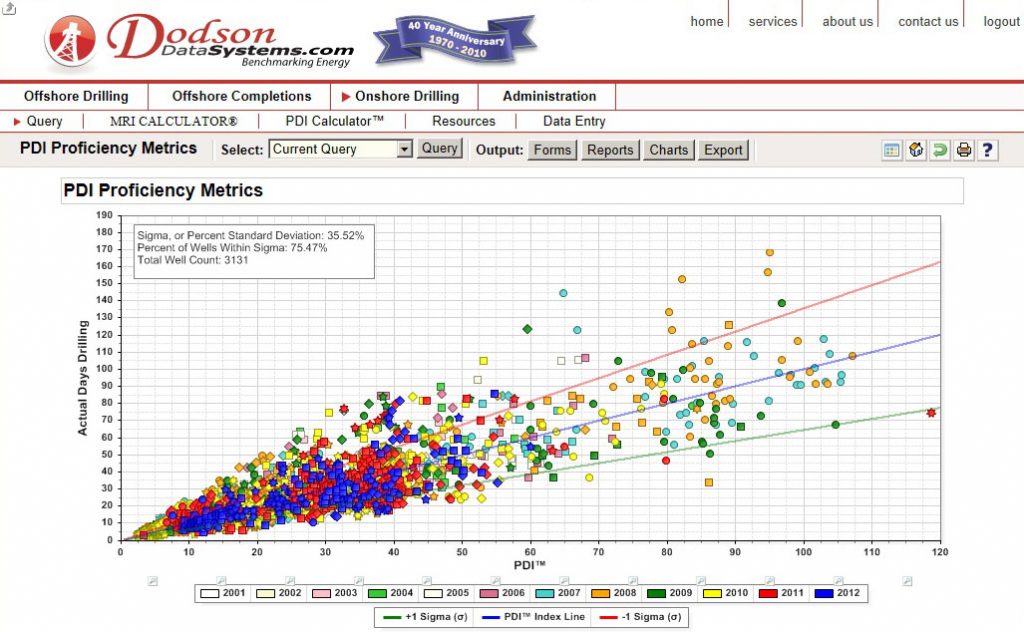

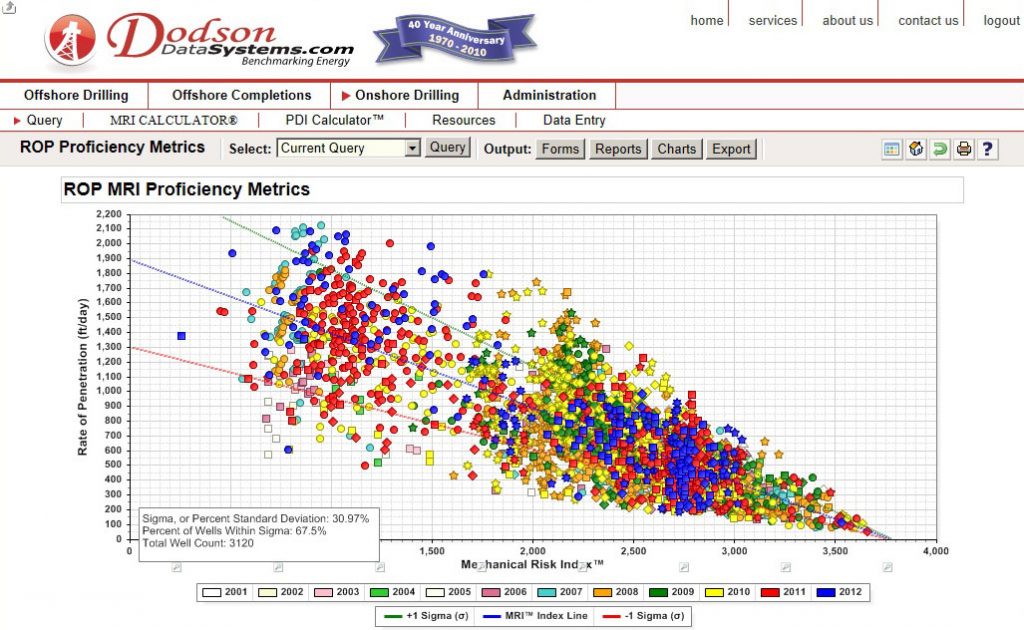

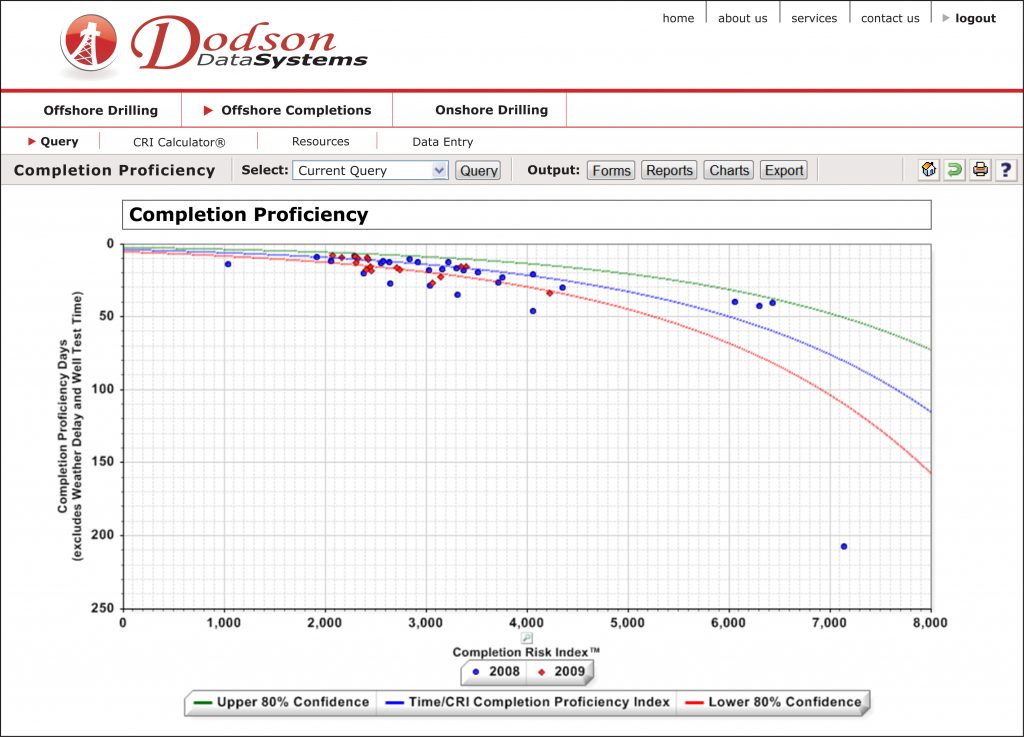

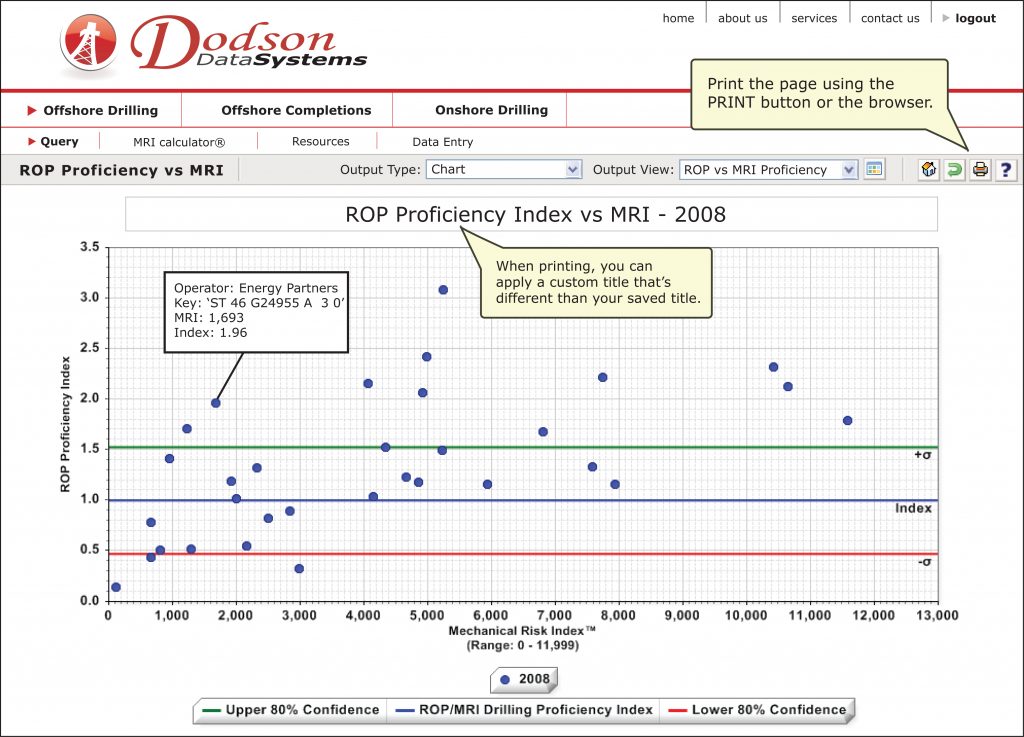

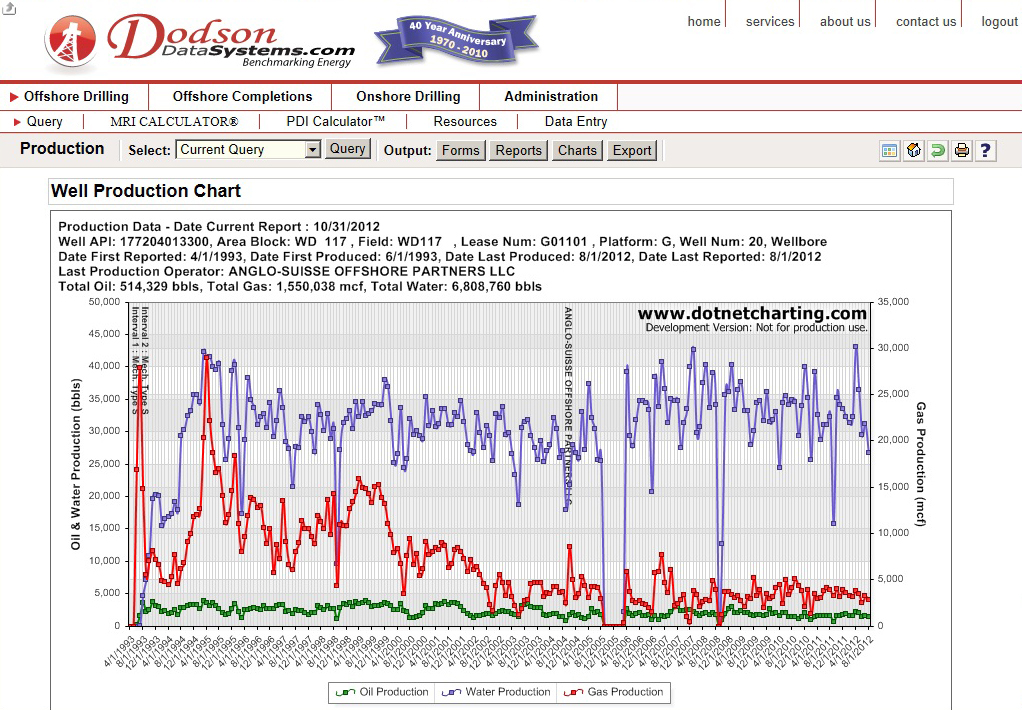

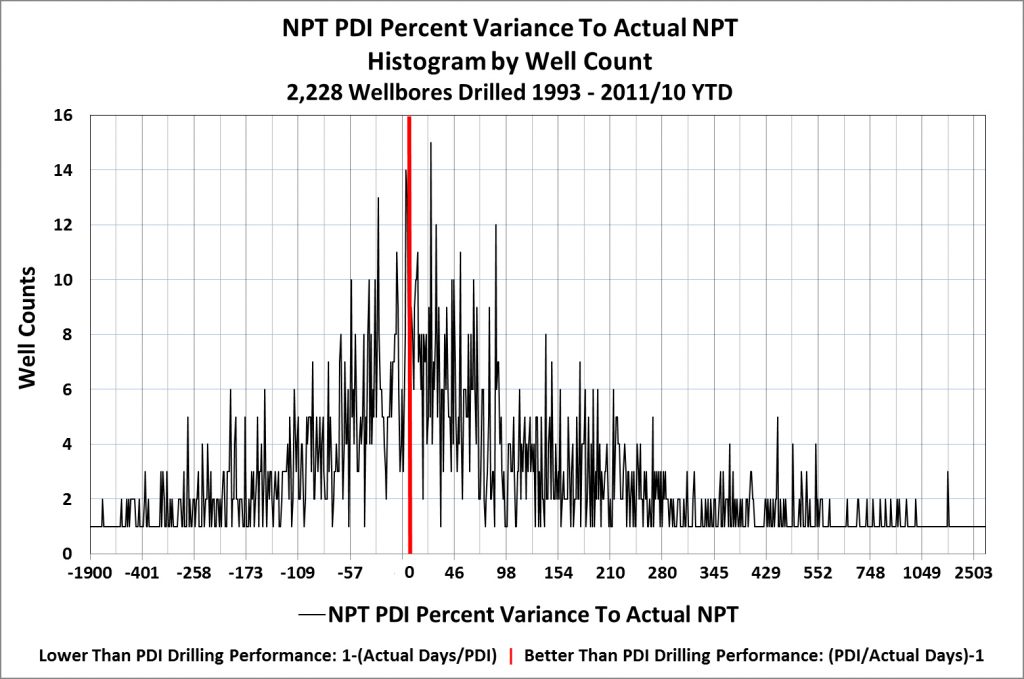

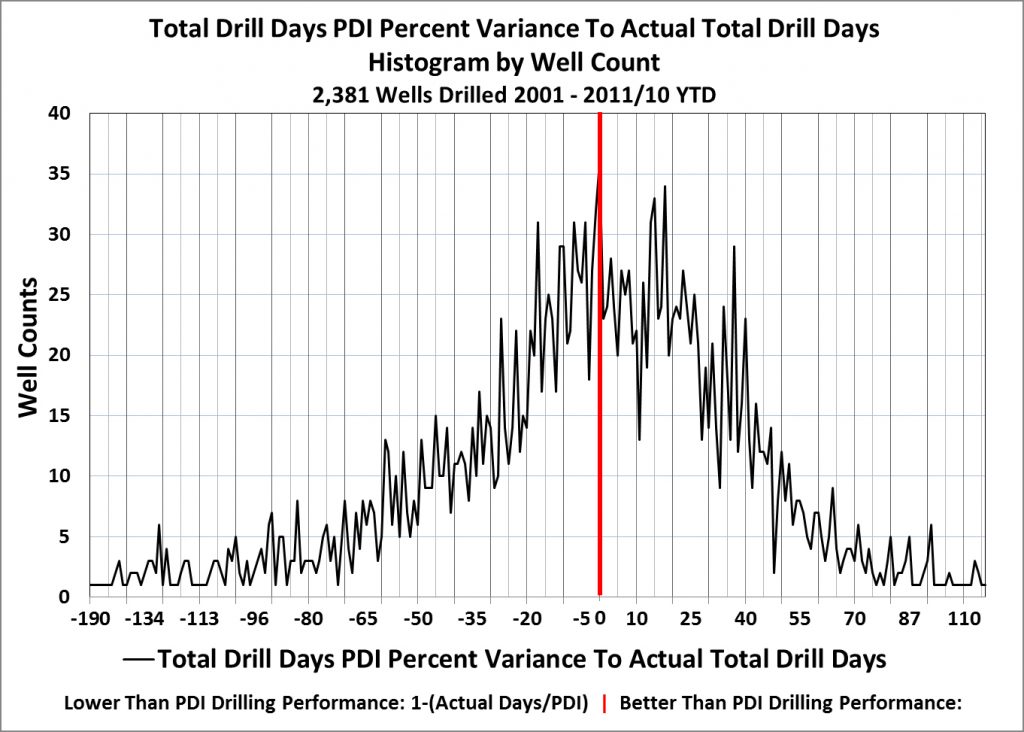

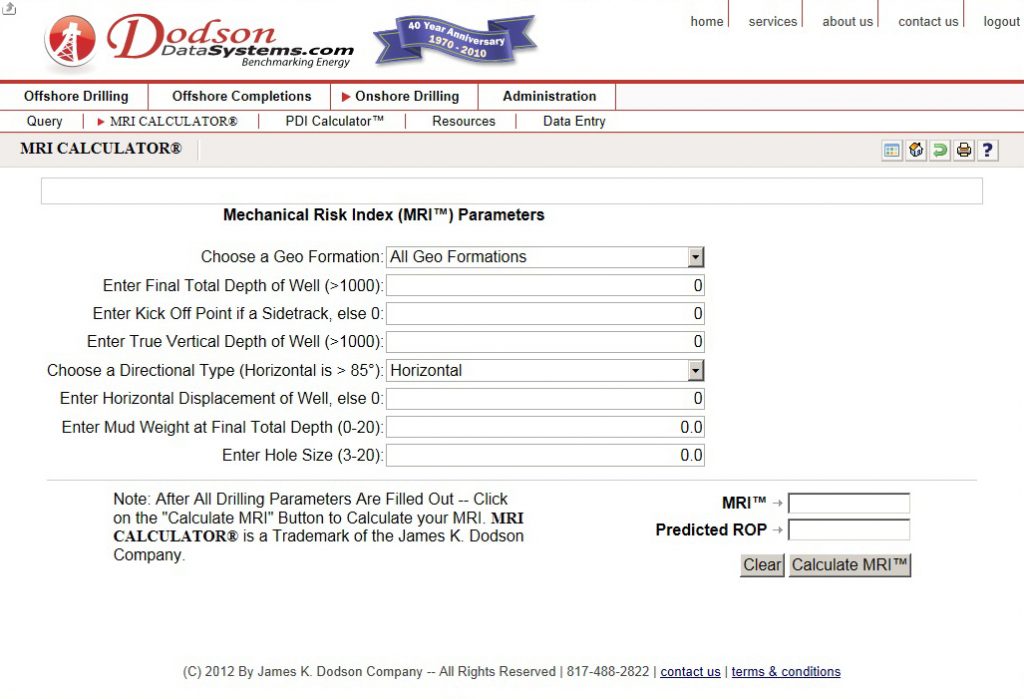

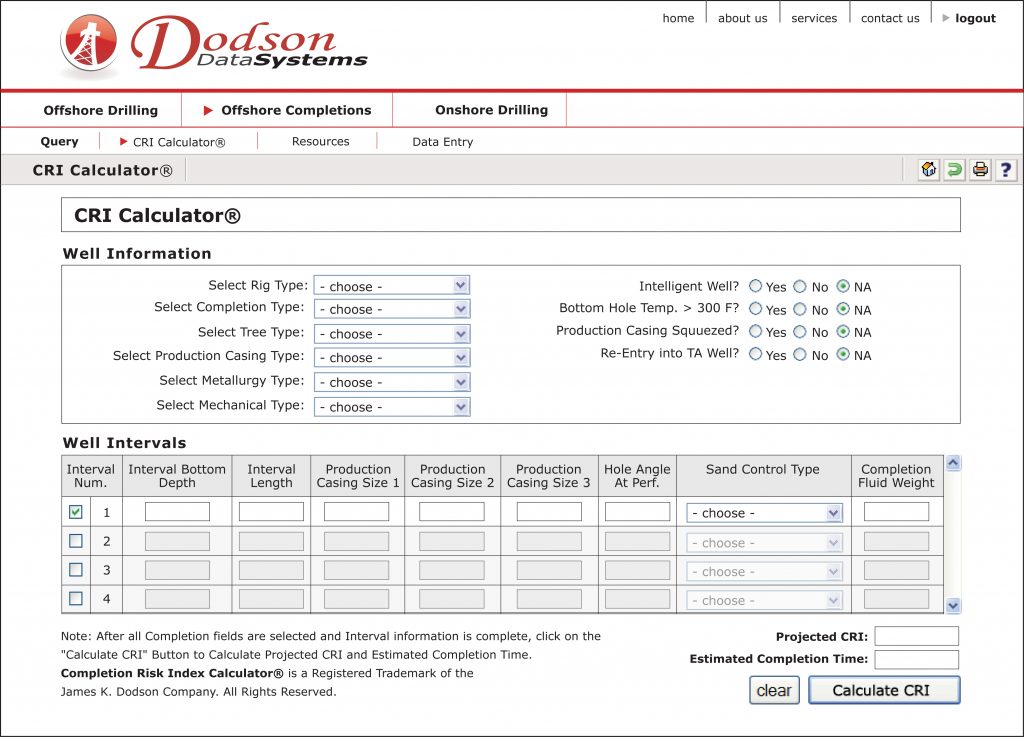

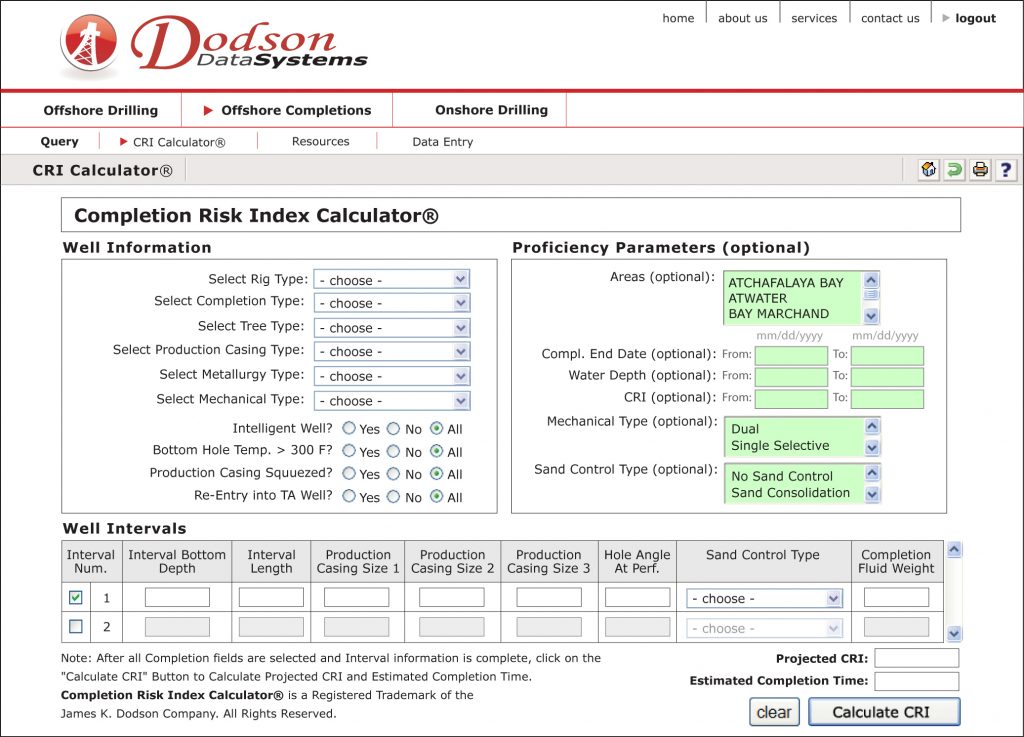

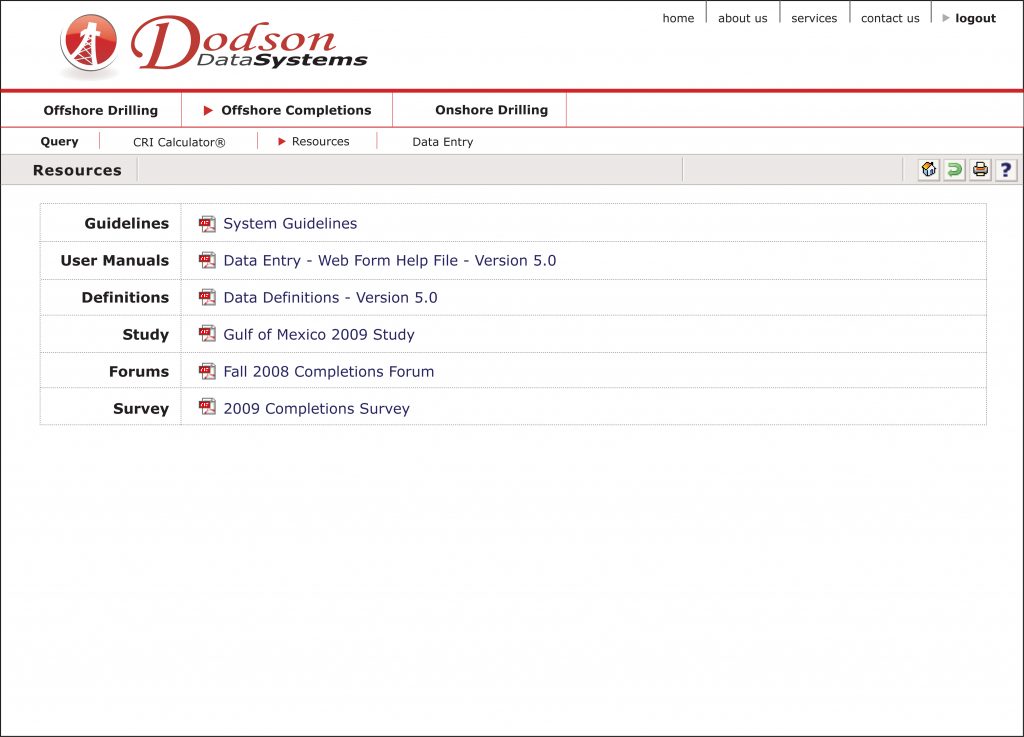

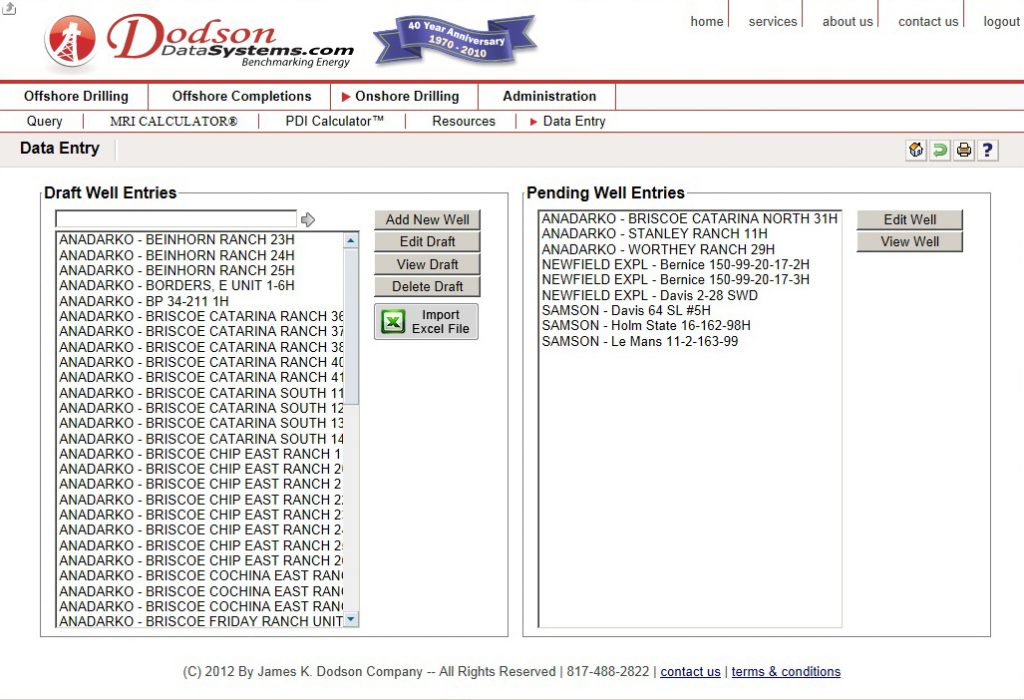

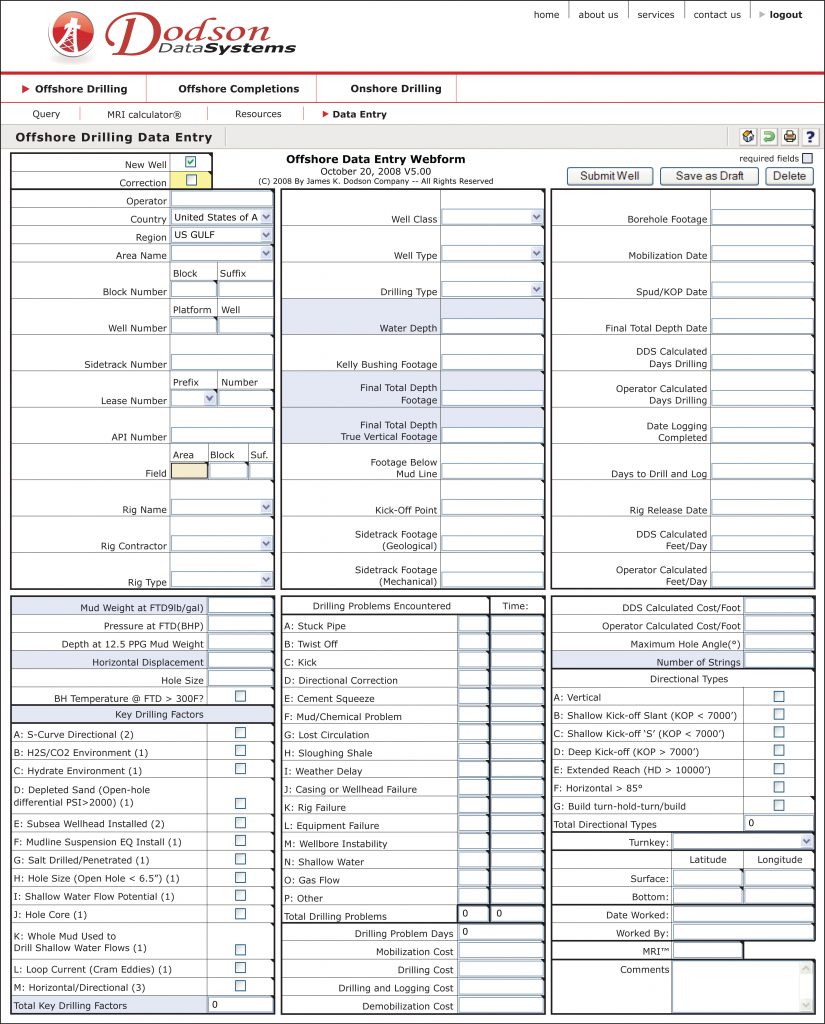

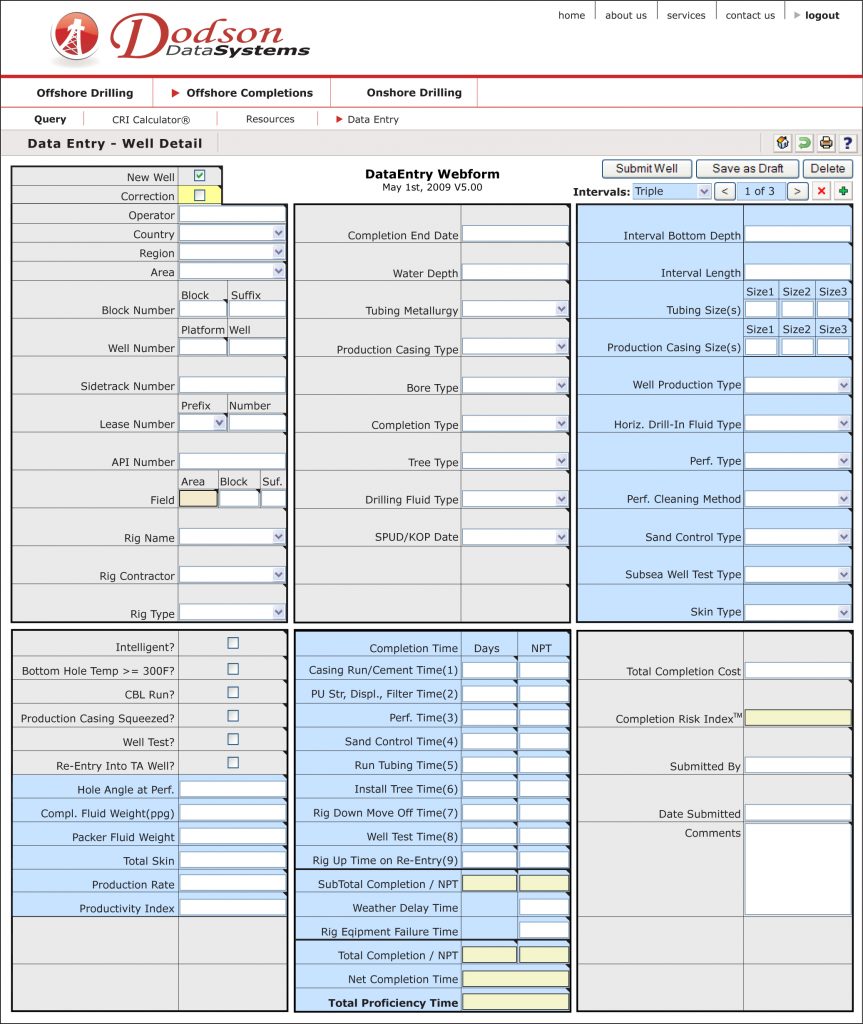

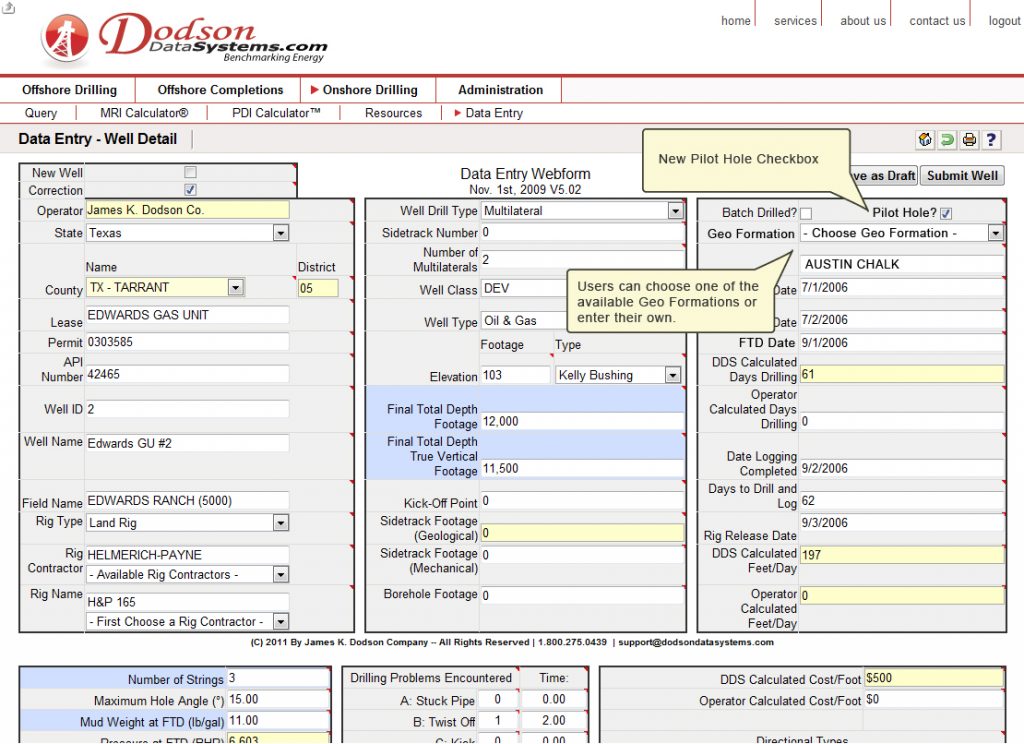

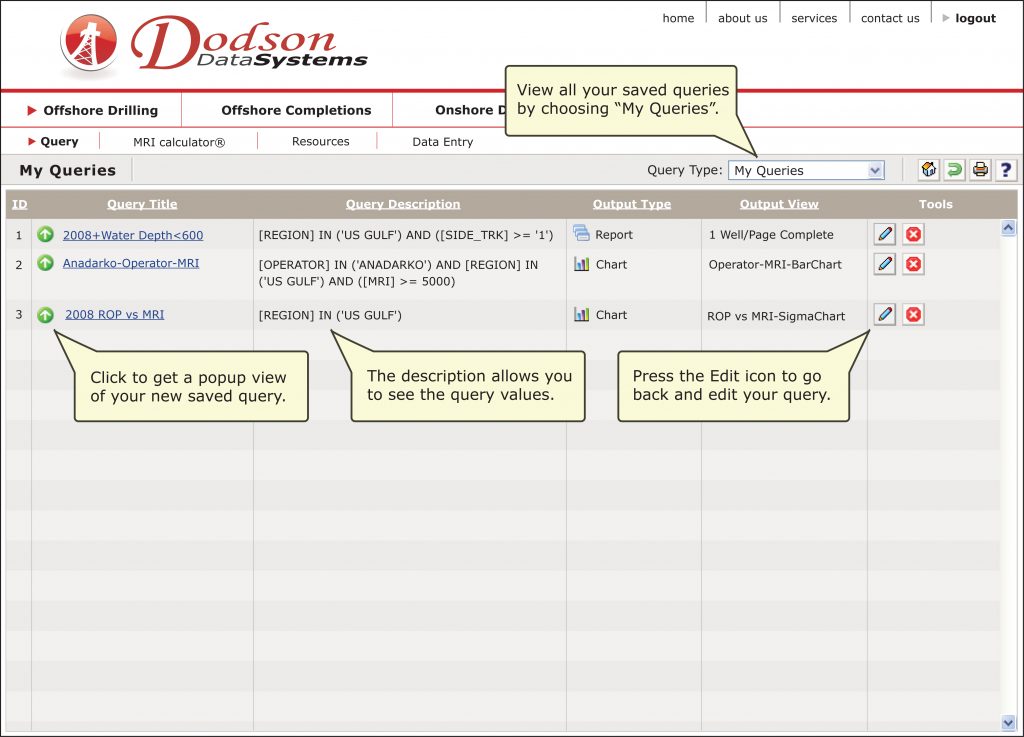

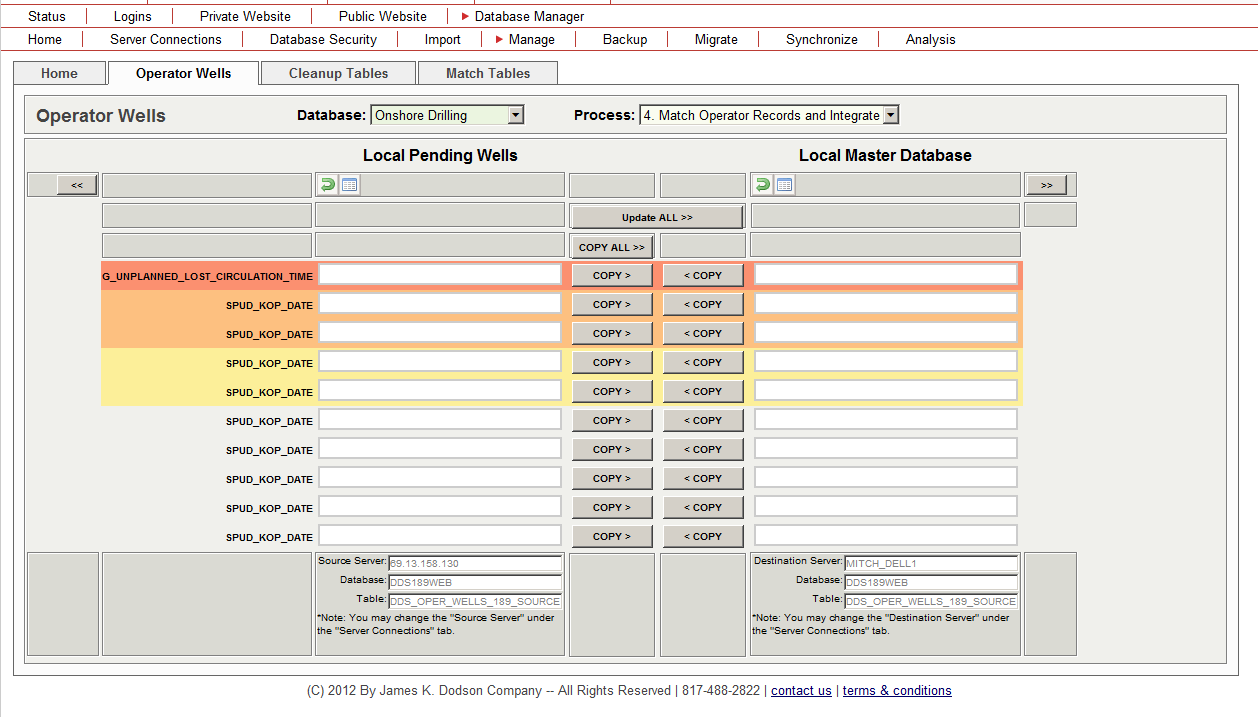

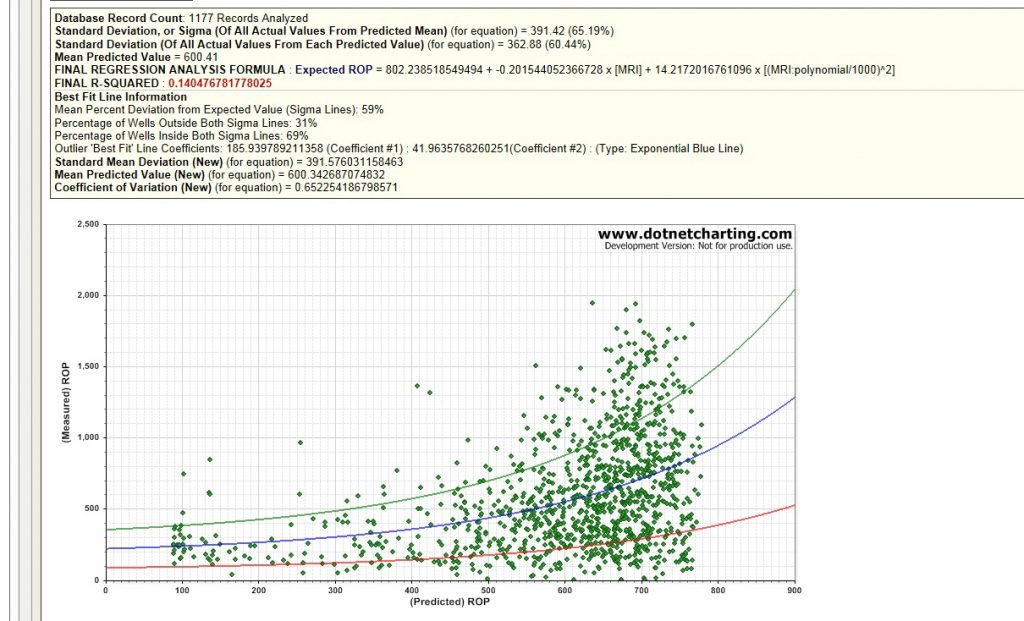

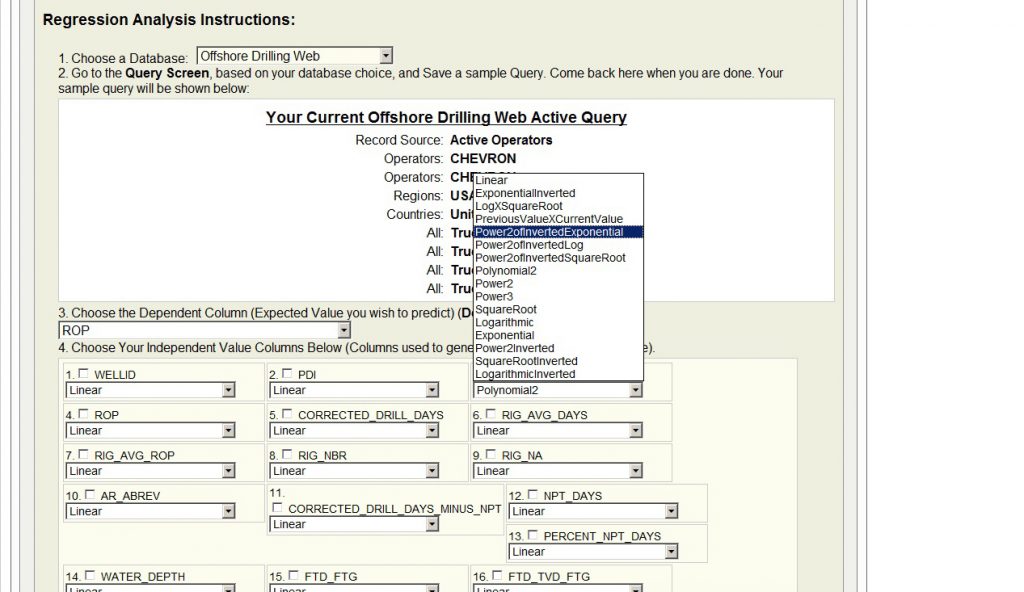

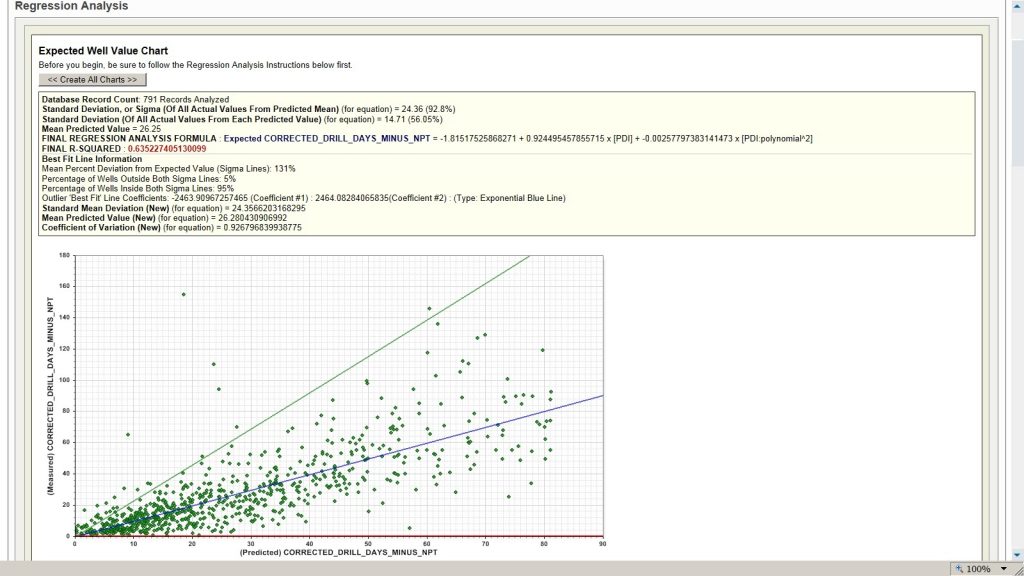

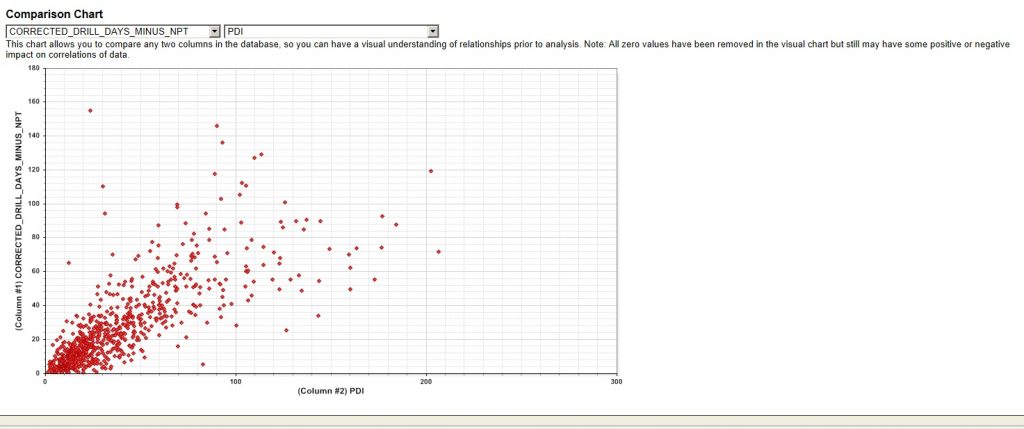

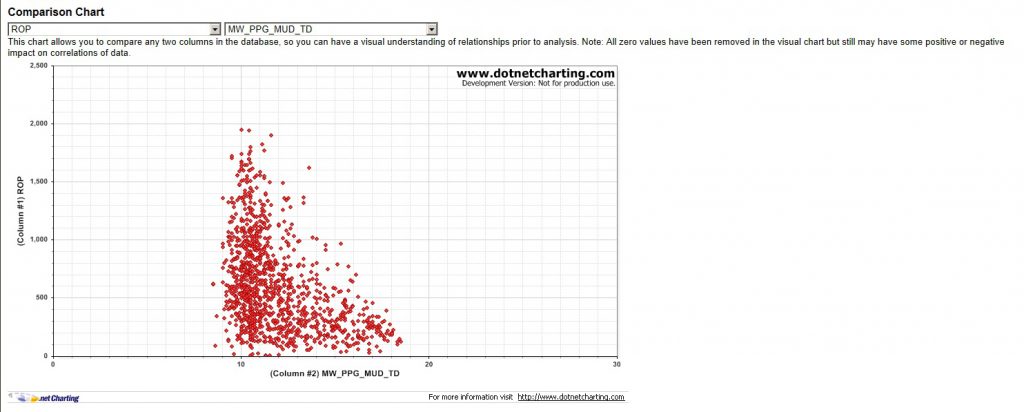

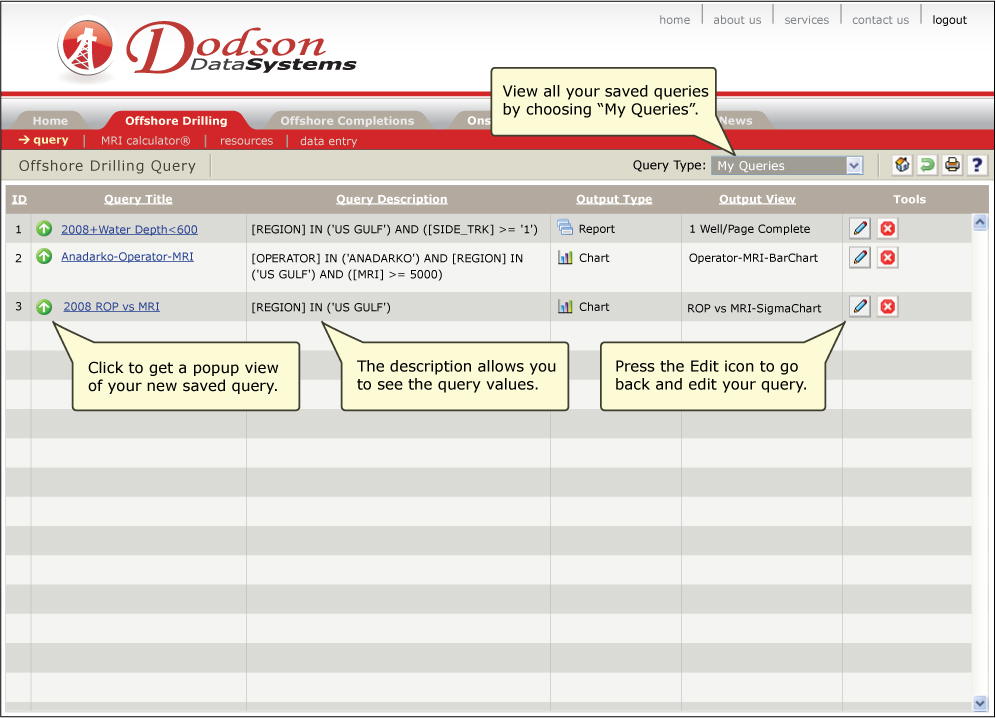

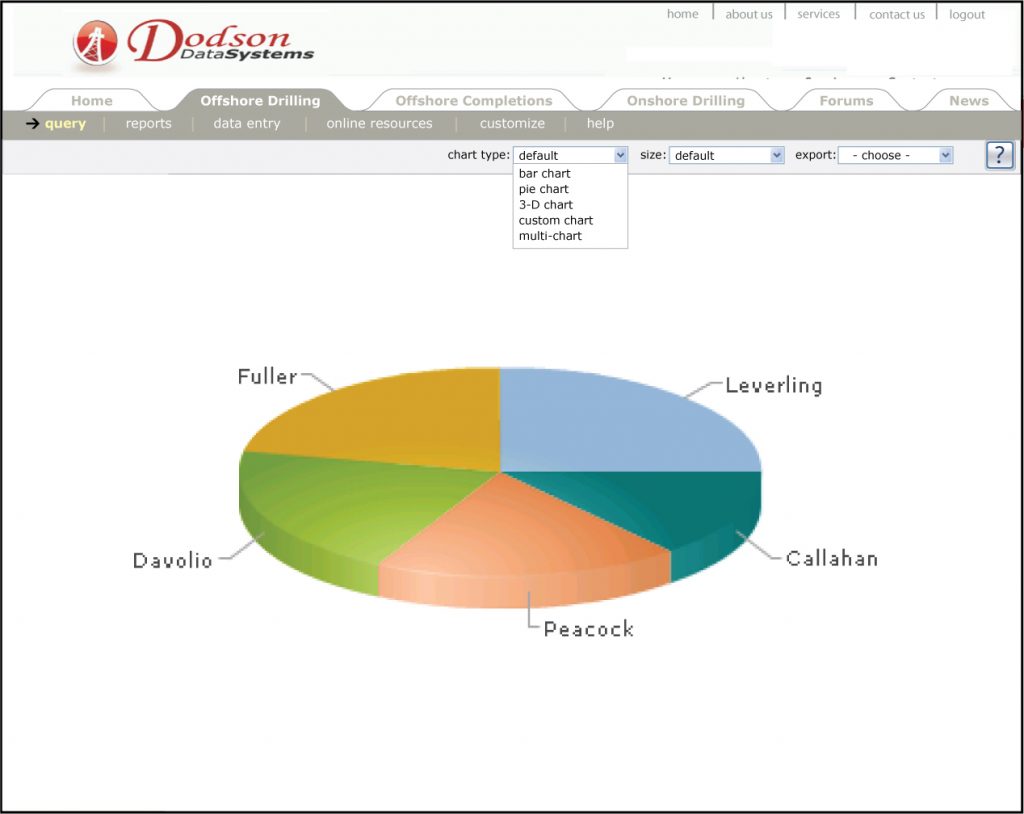

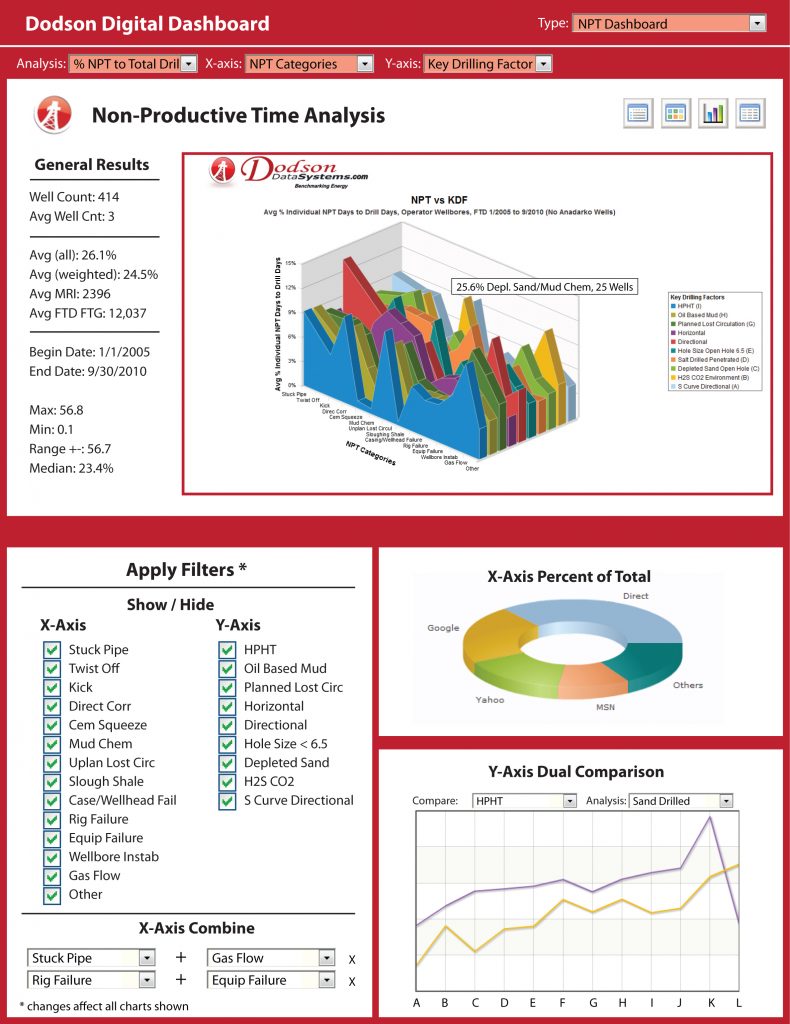

Most of the screenshots shown below show a very large data query application I built for the energy industry here in Texas. As the only developer on the project (yes, I am a full-stack developer: HTML, C#.NET, and dynamic T-SQL), I was required to build the whole thing front to back, from the ground up. My client needed a fully queryable web application that made the SQL data and its analysis accessible to their own customers. They wanted their oil and gas clients to log in and run graphs and analysis on the drilling data they and their peers had entered into the system. But it was the deeper analysis and benchmarking of the data against their peers that the drillers really needed. A great deal of money had already been spent on a prior failed system, and their clients were still drilling wells onshore and offshore with no way to measure their efficiency relative to their competitors. Millions of dollars were at stake, as some offshore wells had cost over a million dollars a day to drill.

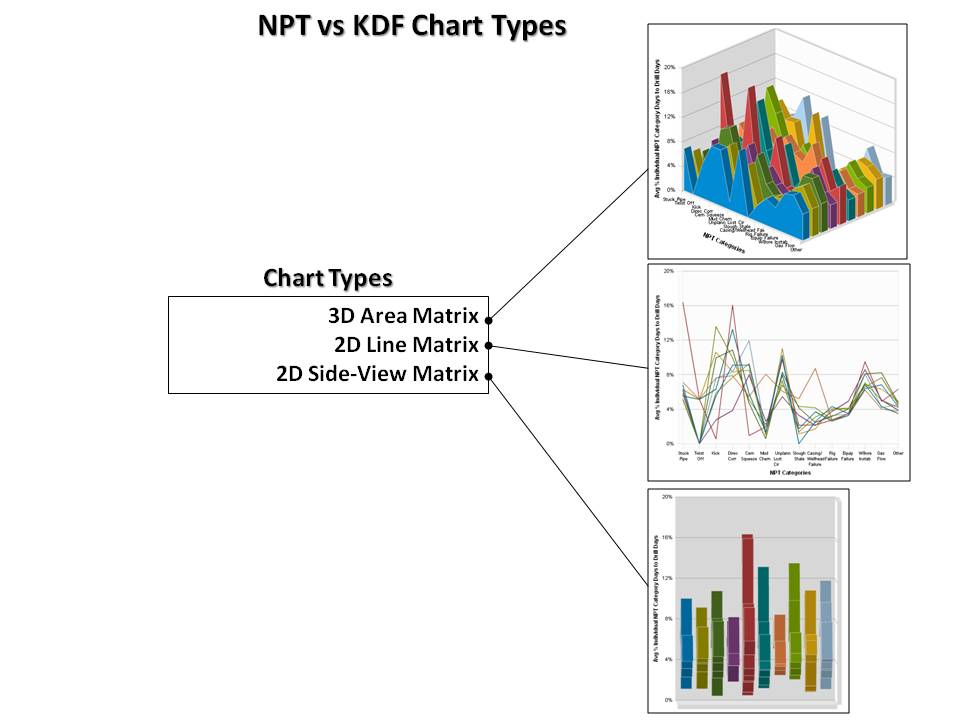

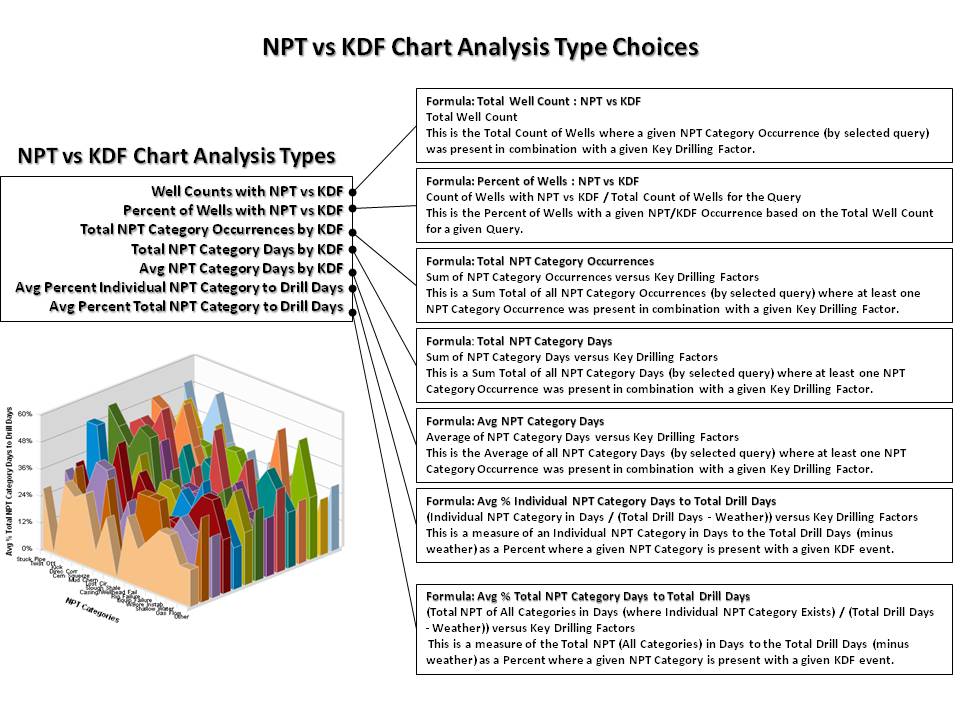

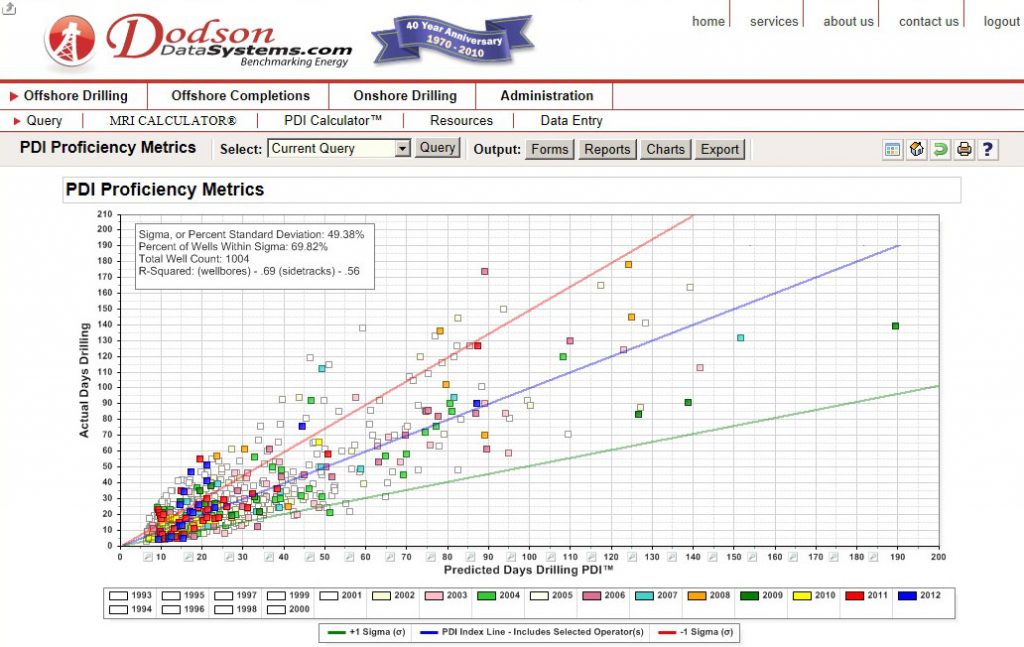

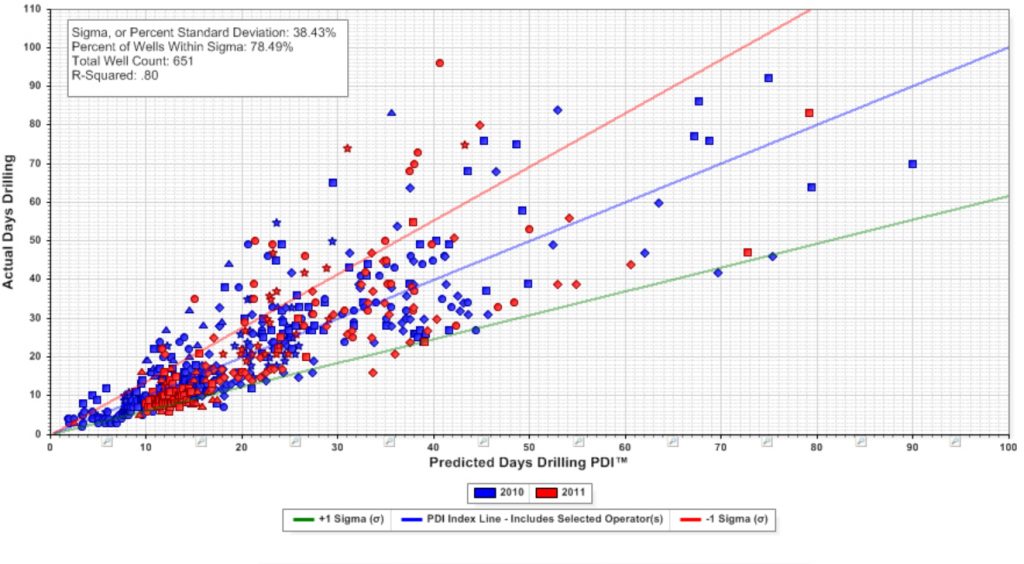

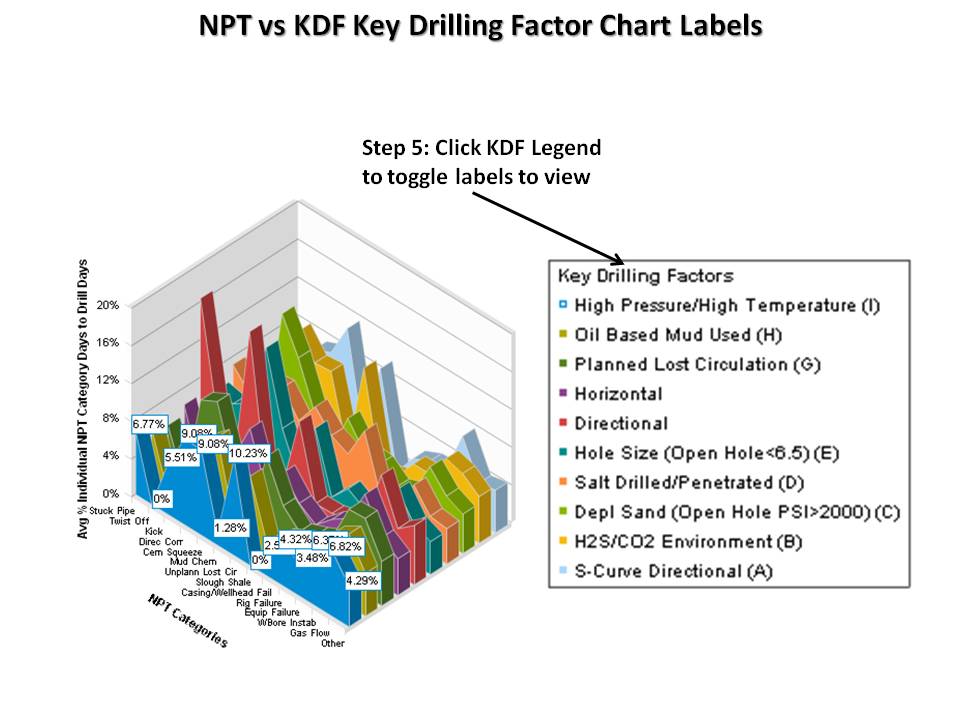

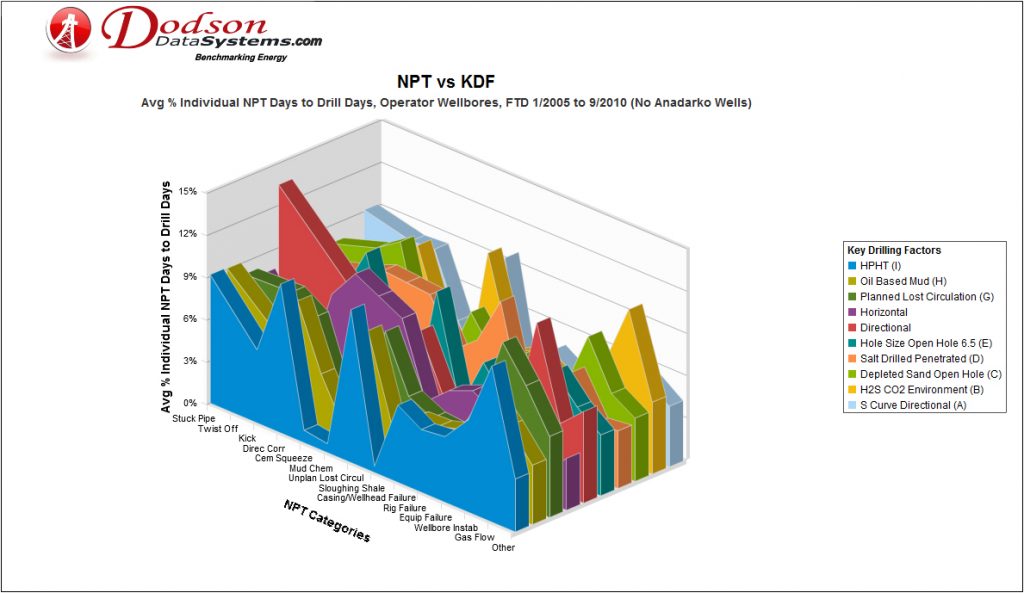

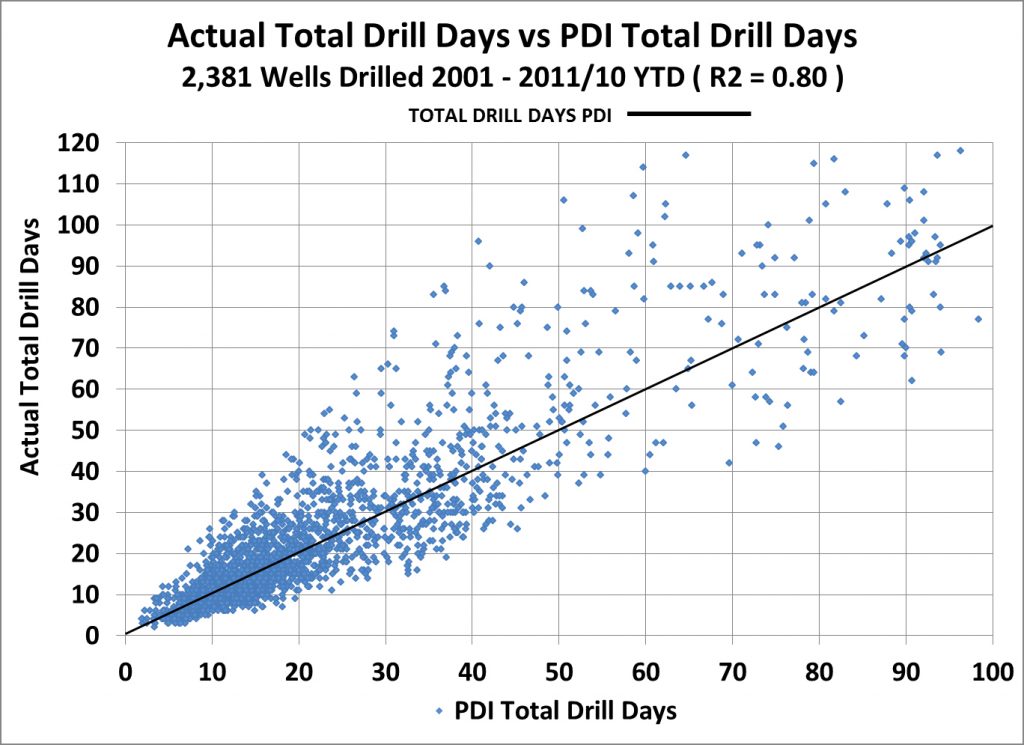

What was unique about this client and the solution I provided was the need for both input and output, using a complex, dynamic, rules-based SQL back end in combination with an easy-to-use front end driven by rich graphics and charts. Combined with a complex security management system integrated into the whole, the final web application not only allowed for very granular, web-forms-based querying through a very simple interface, but also involved storage and retrieval of those queries, powerful XML-driven form field caching, unique three-dimensional charting, and a new, highly predictive non-linear regression analysis tool. This analysis tool allowed me to generate the fresh algorithms, coefficients, and mathematical models the business needed to predict days to drill and rate of penetration for all of the client’s North American onshore and offshore oil and gas customers.
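To make the idea of rules-based dynamic querying concrete, here is a minimal sketch of how web form values can be turned into a parameterized SQL query. It is an illustration only, not the production code (which was C# and dynamic T-SQL): it uses Python with an in-memory SQLite database, and the `wells` table, its columns, the `ALLOWED_FILTERS` whitelist, and the sample rows are all hypothetical.

```python
import sqlite3

# Hypothetical whitelist: form field name -> (column, comparison operator).
# Only these names can ever reach the SQL text; user values are always bound
# as parameters, never concatenated into the query string.
ALLOWED_FILTERS = {
    "region": ("region", "="),
    "min_depth_ft": ("depth_ft", ">="),
    "max_depth_ft": ("depth_ft", "<="),
}


def build_query(form_values):
    """Turn submitted form values into a parameterized SELECT statement."""
    clauses, params = [], []
    for field, value in form_values.items():
        if value in (None, ""):
            continue  # a blank form field adds no rule
        if field not in ALLOWED_FILTERS:
            raise ValueError(f"unknown filter: {field}")
        column, op = ALLOWED_FILTERS[field]
        clauses.append(f"{column} {op} ?")
        params.append(value)
    sql = "SELECT well_name, region, depth_ft, days_to_drill FROM wells"
    if clauses:
        sql += " WHERE " + " AND ".join(clauses)
    sql += " ORDER BY well_name"
    return sql, params


def run_demo():
    """Run one query against made-up sample rows and return the results."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE wells "
        "(well_name TEXT, region TEXT, depth_ft REAL, days_to_drill REAL)"
    )
    conn.executemany(
        "INSERT INTO wells VALUES (?, ?, ?, ?)",
        [
            ("A-1", "onshore", 8000, 12),
            ("B-2", "offshore", 15000, 40),
            ("C-3", "onshore", 11000, 18),
        ],
    )
    sql, params = build_query(
        {"region": "onshore", "min_depth_ft": 10000, "max_depth_ft": ""}
    )
    rows = conn.execute(sql, params).fetchall()
    conn.close()
    return sql, params, rows
```

Because the query text and its parameter list are plain data, the same pair can be saved and replayed later, which is one simple way to support stored queries.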

From that data analysis, using my new predictive web tool, the business was able to showcase best-in-class drilling performance and predictive modelling for all of their drilling operators and their wells. This included big oil drillers like Chevron, Shell, and Anadarko in Houston. Based on drilling variables determined in the web system analysis, the graphed results showed that the model correlated better than past analyses with the known faster penetration rates of shale fracking in popular North American shale plays. As such, the new system could predict such rates to a higher degree of accuracy than ever before. This new analysis saved their clients millions of dollars, allowing the drillers to push ahead with proven, benchmarked best practices they could now count on. All of this came from the data modelling and mathematics I generated from their harvested databases, based on deep analysis of their often flawed well data.
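For readers curious what fitting a predictive drilling curve can look like, the sketch below fits a simple power-law model, days = a × depth^b, to a handful of points. This is an assumed stand-in, not the actual model or coefficients used in the application: the power-law form, the function names, and the sample numbers are all hypothetical, and the fit is done by ordinary least squares on log-transformed data rather than by a full iterative non-linear solver.

```python
import math


def fit_power_law(depths_ft, days):
    """Fit days = a * depth**b by least squares on log-log data.

    Taking logs gives log(days) = log(a) + b * log(depth), which is a
    straight line, so the usual slope and intercept formulas apply.
    """
    xs = [math.log(d) for d in depths_ft]
    ys = [math.log(t) for t in days]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                      # slope = exponent
    a = math.exp(mean_y - b * mean_x)  # intercept = log of the coefficient
    return a, b


def predict_days(a, b, depth_ft):
    """Predicted days to drill for a well of the given depth."""
    return a * depth_ft ** b


# Synthetic, made-up data generated from a known curve (a=0.001, b=1.1),
# used only to check that the fit recovers the coefficients it was given.
sample_depths = [5000.0, 8000.0, 12000.0, 15000.0]
sample_days = [0.001 * d ** 1.1 for d in sample_depths]
fitted_a, fitted_b = fit_power_law(sample_depths, sample_days)
```

Real well data is noisy and often flawed, so a production model would need data cleanup first and would likely involve more variables than depth alone.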

As such, I consider this very complex and unique web application, shown below, the crown jewel of my web application work over the past 15 years. The best aspects of usable information design, utility, usability, data analysis, online innovation, mathematical integration, and .NET C# best-practices architecture came into play in this one web application.